Search engines answer questions. AI assistants help people think. Nearly two thirds of AI conversations involve no commercial intent at all.

We spent twenty years training ourselves to think in search queries. Short keyword phrases. Blue links. Click through to find the answer. That mental model shaped how we build websites, write content, and measure success online.

AI assistants work differently. We analyzed millions of real conversations between humans and chatbots. What we found is that people are not searching. They are thinking out loud. They bring problems, not keywords. They want guidance, not links.

This post breaks down what we learned from our 4.5M conversation dataset alongside OpenAI's own research into ChatGPT usage patterns. We will look at how people actually use AI assistants, what commercial opportunities exist within those conversations, and what this means for your content strategy moving forward.

AI Chatbot Adoption

OpenAI released detailed research on ChatGPT usage patterns in September 2025. Over 800 million people now use ChatGPT every week. They send around 20 billion messages a week. That represents one in ten adults on the planet having regular conversations with an AI assistant.

The growth came from an unexpected direction. Personal use expanded from just over half of all messages to nearly three quarters in a single year. Professional use cases still exist. They just got overtaken by people using AI in their daily lives outside of work.

This shift happened within existing user groups too. The same people who started using ChatGPT for their jobs began turning to it for personal tasks. Cooking advice. Travel planning. Homework help for their kids. The tool expanded from a productivity enhancement into something closer to a thinking companion.

How People Use ChatGPT

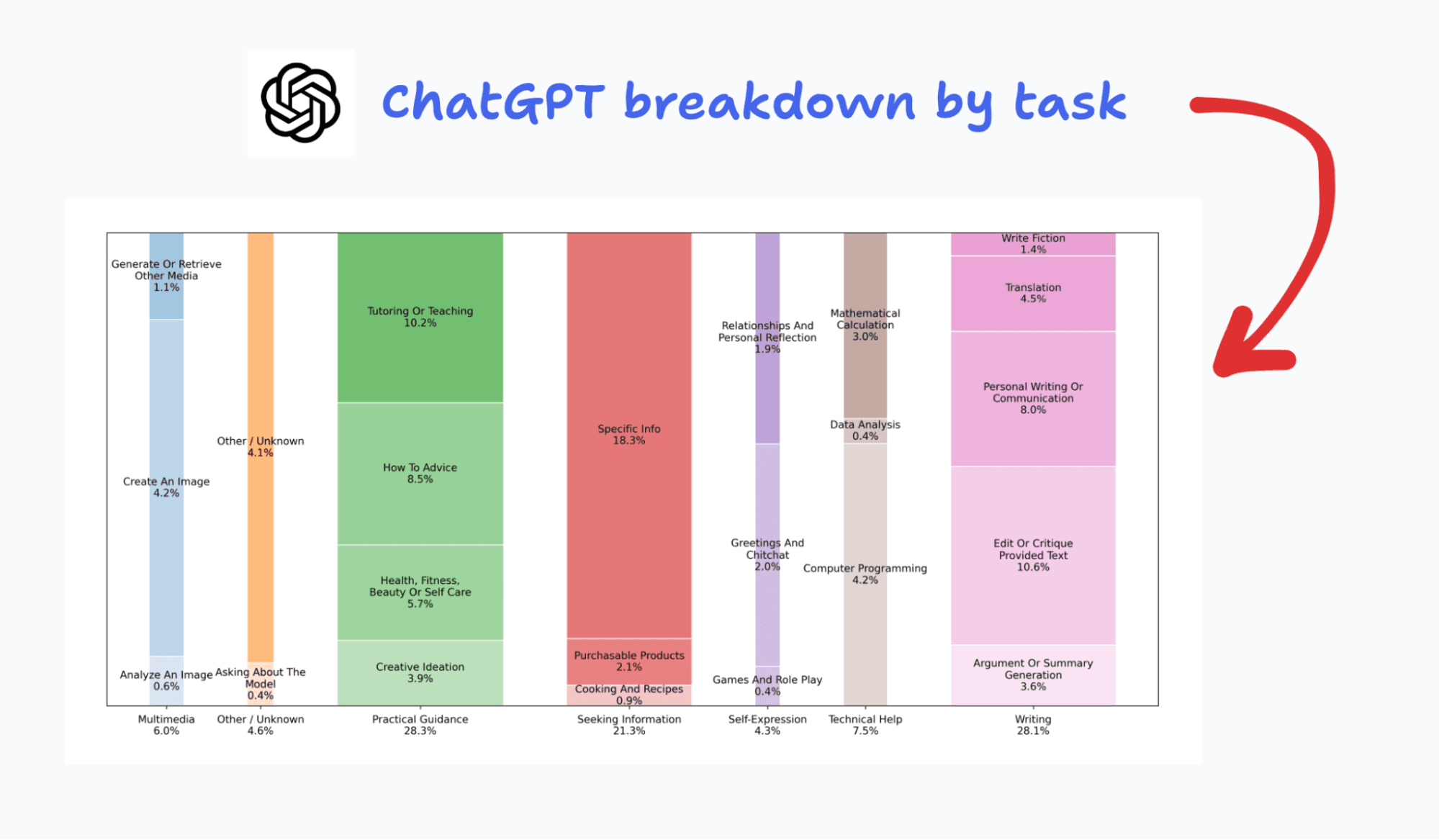

OpenAI grouped conversations into categories and found that three types of activity account for nearly four out of every five messages.

Practical guidance leads at 29% of messages. People ask for advice on how to approach a problem. Questions like "how should I handle this situation with my landlord" or "what is the best way to organize my kitchen renovation."

Information seeking grew fastest over the past year. Its share rose from 14% to 24% of all messages, an increase of roughly 70%. These are questions that would traditionally go to Google. But the way people phrase them differs from search queries. They ask for context and explanation, not just links to click.

Writing assistance dropped from 36% down to 24%. This category still matters, especially in professional settings. But its dominance is fading as people discover other ways to use their AI assistant.
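These shares are easy to sanity-check. A short Python sketch, using only the percentages quoted above, computes the combined weight of the top three categories and the relative growth of information seeking:

```python
# Category shares of all ChatGPT messages, as quoted in this post.
shares = {"practical_guidance": 29, "information_seeking": 24, "writing": 24}

# Combined share of the three largest categories.
top_three = sum(shares.values())
print(f"Top three categories: {top_three}% of messages")

# Relative change in the information-seeking share, 14% -> 24% of messages.
relative_increase = (24 - 14) / 14
print(f"Information seeking share rose by {relative_increase:.0%}")
```

The three categories sum to 77% of messages, and a move from 14% to 24% is a relative increase of about 71%.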

What professionals actually do with ChatGPT

Within workplace contexts, writing remains the primary activity. 40% of work-related messages involve some form of text generation or manipulation. Two thirds of that writing activity involves editing, refining, critiquing, translating, or summarizing existing content. Only about a third asks ChatGPT to draft something from a blank page.

This pattern reveals how AI fits into real workflows. People do not delegate entire projects to their assistant. They bring their own work and ask for help improving it. The AI acts as a collaborator rather than a replacement.

The categories that get outsized attention

Media coverage of AI chatbots tends to focus on two use cases that barely register in actual usage data.

Programming accounts for just 4.2% of all messages. Developers use ChatGPT. It can help with code. But the programmer demographic is tiny compared to the general population. Most humans never write code and do not intend to start.

Companionship and roleplay conversations represent only 2.4% of activity. The stories about AI girlfriends and emotional support bots generate attention. They do not reflect how most people interact with these tools.

Asking vs Doing vs Expressing

OpenAI introduced a simpler framework for categorizing what users want from their conversations. They identified three core intentions.

Asking describes users seeking information or guidance. This represents 49% of all interactions. People want to understand something. They have questions. They need context to make decisions.

Doing covers situations where users want the AI to produce something concrete. This accounts for 40% of activity. Write this email. Create this image. Generate this code. Something tangible that can be used directly.

Expressing captures emotional and social interactions at 11% of usage. Venting frustrations. Casual conversation. Processing feelings through dialogue.

The trend line matters more than the current snapshot. Asking is growing faster than Doing. Users appear to be shifting from "make this thing for me" toward "help me think through this problem." They want partnership in reasoning, not just production of outputs.

Quality metrics reinforce this shift. Conversations centered on Asking score higher on user satisfaction than those focused on Doing. People feel more helped when the AI guides their thinking than when it simply executes a task.

Commercial User Intents

We ran our own analysis on over 50,000 categorized conversations to understand commercial behavior.

Almost two thirds of AI conversations involve zero commercial intent. People are not shopping. They are not researching products. They are not comparing brands. They are thinking, creating, learning, and chatting.

The remaining third contains some signal of commercial interest. But that signal ranges from extremely vague awareness to active purchase support. Most of it clusters at the top of the traditional marketing funnel.

Where commercial conversations happen

Awareness-stage activity makes up 10% of all conversations. Users explore problem spaces before they even know what kind of product might help. Someone asks "my back hurts when I sit at my desk all day" or "what types of project management tools exist." They have not started looking for specific products yet. They want to understand their situation first.

Comparison and evaluation behavior represents 8% of sessions. These users are actively weighing options. They ask questions like "Slack vs Teams vs Discord for a small team" or "is Dyson worth the price" or "will this RAM work with my motherboard." This stage offers the clearest opportunity for commercial content to add value.

Post-purchase conversations at 5% actually exceed pre-purchase transaction support. More people turn to AI after buying something than during the purchase itself. They ask "how do I configure my new router" or "my new espresso machine is leaking" or "what features of Notion am I probably not using." Setup, troubleshooting, and getting more value from existing purchases.

Product and brand discovery accounts for 4.1% of activity. These conversations involve explicit searches for solutions. "What accounting software works best for freelancers" or "who makes running shoes for flat feet."

Opinion seeking and decision validation represents the smallest commercial category at 3%. Users who have narrowed down their options ask for final input. "Should I get the Pro or the base model." "Fixed vs variable rate mortgage right now." "Should I buy now or wait for Black Friday."

The large bucket of non-commercial activity

A quarter of all conversations did not fit neatly into our classification system. This "other" category likely contains attempts to manipulate the AI, roleplay scenarios, highly technical requests, and conversations too ambiguous to categorize.

The remaining non-commercial activities break down in interesting ways.

Brainstorming at 8% involves idea generation and creative problem solving. People use AI as a sounding board for naming projects, exploring possibilities, and working through challenges.

Planning at 6% covers organizational tasks. Building schedules. Mapping out travel itineraries. Structuring projects and setting goals.

Conversation at 6% represents purely social interaction. Emotional processing. Casual chat. Entertainment through dialogue. People treat AI as something between a friend and a therapist.

Analysis at 6% involves working through data, calculations, and complex decisions that have no commercial dimension. Personal dilemmas. Ethical questions. Mathematical problems.

Learning at 5% describes tutoring and educational support. Explaining concepts. Helping with homework. Preparing for exams.

Transformation at 5% covers reformatting existing content. Summarizing long documents. Translating between languages. Adjusting tone and style.

Creation at 4% involves generating new content from scratch. Writing projects. Document templates. Code development.

Brainstorming and planning together account for 14% of all conversations. Users treat AI as a thinking partner for creative and organizational tasks.

The creation figure deserves attention. Pure creation accounts for only about 4% of conversations. The narrative around AI replacing human creators focuses on a tiny slice of actual usage. Most people bring their own content and ask for help transforming it.
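The category shares in the commercial and non-commercial breakdowns can be reconciled with the "almost two thirds" figure. A short Python sketch using only the percentages reported above (the "other" bucket is grouped with non-commercial activity, as this post does):

```python
# Percent of all conversations, as reported in this post.
non_commercial = {
    "brainstorming": 8, "planning": 6, "conversation": 6, "analysis": 6,
    "learning": 5, "transformation": 5, "creation": 4,
}
other = 25  # conversations that fit no category, grouped with non-commercial

commercial = {
    "awareness": 10, "comparison": 8, "post_purchase": 5,
    "discovery": 4.1, "opinion_seeking": 3,
}

thinking_partner = non_commercial["brainstorming"] + non_commercial["planning"]
categorized_nc = sum(non_commercial.values())
total_nc = categorized_nc + other
total_commercial = sum(commercial.values())
not_broken_out = 100 - total_nc - total_commercial

print(f"Brainstorming + planning:   {thinking_partner}%")
print(f"Categorized non-commercial: {categorized_nc}%")
print(f"Non-commercial incl. other: {total_nc}%")
print(f"Listed commercial stages:   {total_commercial:.1f}%")
print(f"Not broken out:             {not_broken_out:.1f}%")
```

The categorized non-commercial activities sum to 40%, and adding the 25% "other" bucket gives 65%, just under two thirds. The listed commercial stages sum to about 30%; the roughly 5 points left over are not broken out individually in this post.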

How Conversations Actually Flow

The structure of typical AI conversations tells us something about user expectations. Most exchanges are remarkably brief.

The average conversation runs 5 turns, but the median is just 2. That gap reveals the distribution. Most people ask a single question and receive a single response. Task complete. They got what they needed and moved on. A smaller group of users has extended back-and-forth exchanges that pull the average up.

Word counts show the same pattern. The average conversation runs 700 words, but the median is closer to 400. A third of conversations fall between 100 and 500 words total. Another fifth contain fewer than 100 words. These are quick transactions. A specific question meets a direct answer.

Eight out of ten conversations stay under 1,000 words. Only a small fraction extend into longer exchanges. Those extended conversations likely represent complex tasks. Detailed document editing. Multi-step code problems. Extended tutoring sessions.
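A mean well above the median is the signature of a right-skewed distribution. A minimal Python sketch with ten hypothetical conversations (invented numbers chosen so their summary statistics echo the figures above, not sampled from the dataset) shows a few long sessions pulling the mean up while the median stays low:

```python
from statistics import mean, median

# Hypothetical conversations, invented for illustration only.
# Most are short; a couple of long sessions form the right tail.
turns = [2, 2, 2, 2, 2, 2, 4, 6, 12, 16]
words = [60, 90, 150, 250, 350, 450, 600, 900, 1400, 2750]

print(f"turns: mean {mean(turns):.0f}, median {median(turns):.0f}")
print(f"words: mean {mean(words):.0f}, median {median(words):.0f}")

# Share of conversations that stay under 1,000 words.
under_1000 = sum(w < 1000 for w in words) / len(words)
print(f"under 1,000 words: {under_1000:.0%}")
```

This prints a mean of 5 turns against a median of 2, a mean of 700 words against a median of 400, and 80% of conversations under 1,000 words. The two longest sessions alone account for about 60% of all words.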

The ratio of user input to assistant output reveals another pattern. Most users contribute only a sixth of the conversation content. They receive five times more than they give. This asymmetry reflects the fundamental dynamic. Short prompts generate lengthy responses. Questions yield explanations. The AI does most of the talking.

The median user message is only 300 characters. But the average user message runs over 2,000 characters. That is more than a sixfold difference between median and mean. What explains this gap? Document pasting. A subset of users copy entire articles, reports, or code files into the chat for summarization or analysis. These heavy users shift the aggregate statistics significantly. When you add up all content across all conversations, users contribute 40% of total characters. But the median session tells a different story: there, users supply only about a sixth of the text.
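The 40% aggregate figure and the one-sixth per-session figure do not contradict each other. Pooling all characters lets a few document-pasting sessions dominate, while the median session is unaffected. A minimal Python sketch with ten hypothetical sessions (invented numbers, with the simplifying assumption of one user message per session) reproduces the pattern:

```python
from statistics import mean, median

# Hypothetical sessions as (user_chars, assistant_chars), illustrative only.
# Eight short prompts, plus two sessions where the user pasted a long document.
sessions = [(300, 1500)] * 8 + [(8800, 9000)] * 2

user_chars = [u for u, _ in sessions]
per_session_share = [u / (u + a) for u, a in sessions]

# Per-message view: the median ignores the two pasted documents, the mean does not.
print(f"median user message: {median(user_chars):.0f} chars")
print(f"mean user message:   {mean(user_chars):.0f} chars")
print(f"mean / median:       {mean(user_chars) / median(user_chars):.1f}x")

# Per-session view vs. pooled view of who contributes the text.
total_user = sum(u for u, _ in sessions)
total_all = sum(u + a for u, a in sessions)
print(f"median per-session user share: {median(per_session_share):.2f}")
print(f"aggregate user share:          {total_user / total_all:.2f}")
```

Here the median user message is 300 characters and the mean is 2,000, a ratio of about 6.7. The median session gives the user a share of about 0.17 (one sixth), while pooling every character gives users 0.40 of the total, because the two pasting sessions contribute most of the user-side text.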

Who Uses ChatGPT

The demographic profile of ChatGPT users shifted significantly over the past year. Early adoption skewed heavily male. By mid-2025, usage had reached rough gender parity with a slight tilt toward users with feminine names.

Age distribution concentrates heavily in younger groups. Nearly half of all adult messages come from users under 26. This generation grew up with search engines. They are learning AI assistants as a distinct tool with different strengths.

Geographic growth patterns favor developing economies. The fastest expansion is happening in low and middle income countries. AI assistants may leapfrog traditional internet tools in regions with less established digital infrastructure.

Professional usage tracks with education and job type. Workers in knowledge-intensive roles use ChatGPT more than those in manual or service positions. Within professional contexts, users emphasize asking over doing. They want analysis and decision support. They use AI to think through problems rather than simply produce deliverables.

Optimizing Your Content Strategy

These findings point toward a different approach to AI optimization. The mental model borrowed from search engine optimization does not transfer well.

Search engines answer the question "what exists." AI assistants help with the question "what should I do." The difference matters enormously for content strategy.

When someone searches Google for "best project management software," they want a list of options with links to learn more. When someone asks ChatGPT the same question, they want guidance on how to choose. They want the assistant to understand their constraints and recommend accordingly.

Implications for commerce and affiliate content

Commercial opportunity exists within AI conversations. But the opportunity is narrower and more nuanced than search traffic.

Combined awareness and comparison activity represents nearly one fifth of sessions (10% plus 8%). These users would benefit from well-structured product information. But they want that information synthesized and contextualized for their specific situation. Not just presented as options to evaluate.

Post-purchase support is genuinely underserved. About 5% of conversations involve users trying to get value from things they already bought. Content that helps people set up, troubleshoot, and maximize their purchases could capture significant AI attention.

Implications for product development

Teams building AI-enhanced products should recognize what users actually want. Nearly two thirds of activity is non-commercial, and most of what can be categorized within it is productivity and creative work.

The core use cases cluster around thinking tasks. Brainstorming. Planning. Analysis. Learning. People want help organizing their thoughts and developing their ideas. They want a partner in cognition.

Content generation ranks surprisingly low. Users seek assistance more than finished products. They want to stay in control of the creative process while getting help with specific challenges.

Implications for content creators

Optimize for questions, not keywords. AI users arrive with problems to solve. Your content should anticipate the questions they will ask and provide frameworks for thinking through decisions.

Build for workflows, not browsing. AI assistants function as tools within larger processes. Structure your content to support specific jobs people are trying to accomplish. Think about the before and after of each interaction.

Recognize different user needs. Managers want help with communication and coordination. Technical users want integration with their development environments. Analysts want structured outputs they can use in their work. Generic content performs worse than targeted solutions.

Key Observations

Five patterns capture the essential reality of AI assistant usage today.

- Interactions are transactional by nature. Two-turn conversations dominate. Users know what they need and get it efficiently.
- AI handles most of the communication. Assistants generate 60% of conversation content. The asymmetry reflects the fundamental purpose. Users ask. AI explains.
- Complexity concentrates in a small tail. Extended sessions represent less than 5% of activity. But they likely contain the highest-value use cases. Document processing. Extended tutoring. Complex problem solving.
- Document processing is common. The gap between average and median user input suggests many people paste existing content for summarization, analysis, or transformation.
- User satisfaction is improving. By mid-2025, positive-rated responses outnumber negative-rated responses by more than four to one. Users are getting better at prompting. Models are getting better at responding. The match between expectations and outputs continues to improve.

User satisfaction is improving. By mid-2025, positive-rated responses outnumber negative-rated responses by more than four to one. Users are getting better at prompting. Models are getting better at responding. The match between expectations and outputs continues to improve.

AI assistants are thinking tools, not search engines. Content strategy that recognizes this distinction will be better positioned to serve users in this new paradigm.

OpenAI published their full "How People Use ChatGPT" whitepaper at cdn.openai.com.
