Free GEO Tool

Query Fan-Out Generator

Generate fan-out queries for user prompts with our QFO simulator. See the hidden sub-queries AI search engines use to find and cite your content.


Discover the hidden searches behind every AI answer

When users ask Google AI Mode, ChatGPT, or Perplexity a question, these systems do not run a single search. They use a technique called query fan-out to break the prompt into multiple specialized sub-queries. Each sub-query searches for different information, and the results are synthesized into a single answer. Your content might rank well for the original prompt but be invisible to the sub-queries that actually determine which sources get cited.

The Query Fan-Out Generator by LLMrefs simulates this process so you can see the kinds of queries AI systems are likely to generate behind the scenes. Enter any prompt your target audience might ask, and the tool reveals the sub-queries you need to optimize for to appear in AI-generated answers.

How to Use the Query Fan-Out Generator

Step 1

Enter a user prompt

Paste a prompt the way your users would actually type it into ChatGPT or Google AI Mode: long, conversational, and unformatted. Use real prompts you want your brand to appear for.

Step 2

Select an AI model

Choose which AI search engine to simulate. Each platform uses different fan-out strategies and adds different modifiers to its sub-queries.

Step 3

Analyze the fan-out queries

Review the sub-queries the AI would send to search engines. Click the Google or Bing buttons to see which pages currently rank for each query and identify content gaps.
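If you want to reproduce Step 3 in a script, a minimal sketch is to build a Google and a Bing results link for every sub-query. It assumes both engines accept the query in a `q` parameter on their public `/search` pages; `serp_links` is a hypothetical helper for illustration, not part of the LLMrefs tool.

```python
from urllib.parse import urlencode

# Public results pages for the two engines the tool links to.
SEARCH_ENGINES = {
    "google": "https://www.google.com/search",
    "bing": "https://www.bing.com/search",
}


def serp_links(sub_queries: list[str]) -> dict[str, dict[str, str]]:
    """Map each fan-out sub-query to a Google and a Bing results URL."""
    links: dict[str, dict[str, str]] = {}
    for query in sub_queries:
        # urlencode escapes spaces as "+" and symbols such as "$" as "%24".
        links[query] = {
            engine: f"{base}?{urlencode({'q': query})}"
            for engine, base in SEARCH_ENGINES.items()
        }
    return links


if __name__ == "__main__":
    queries = [
        "laptops with dedicated GPU under $2000",
        "DaVinci Resolve system requirements laptop",
    ]
    for query, urls in serp_links(queries).items():
        print(query)
        for engine, url in urls.items():
            print(f"  {engine}: {url}")
```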

What Is Query Fan-Out?

Query fan-out is the process where AI search engines break down a single user prompt into multiple sub-queries, run those searches simultaneously, and combine the results into a comprehensive answer. Instead of matching your exact words to a webpage, the AI searches for many related queries and synthesizes information from across all of them.

Google introduced the term "query fan-out" when launching AI Mode in 2025. At Google I/O, Head of Search Elizabeth Reid explained how the process works.

"AI Mode uses our query fan-out technique, breaking down your question into subtopics and issuing a multitude of queries simultaneously on your behalf. This enables Search to dive deeper into the web than a traditional search on Google."

The technique is not unique to Google. ChatGPT Search, Perplexity, Gemini, and Claude all use similar approaches when they need to gather information from the web to answer questions.

Why did Google create query fan-out?

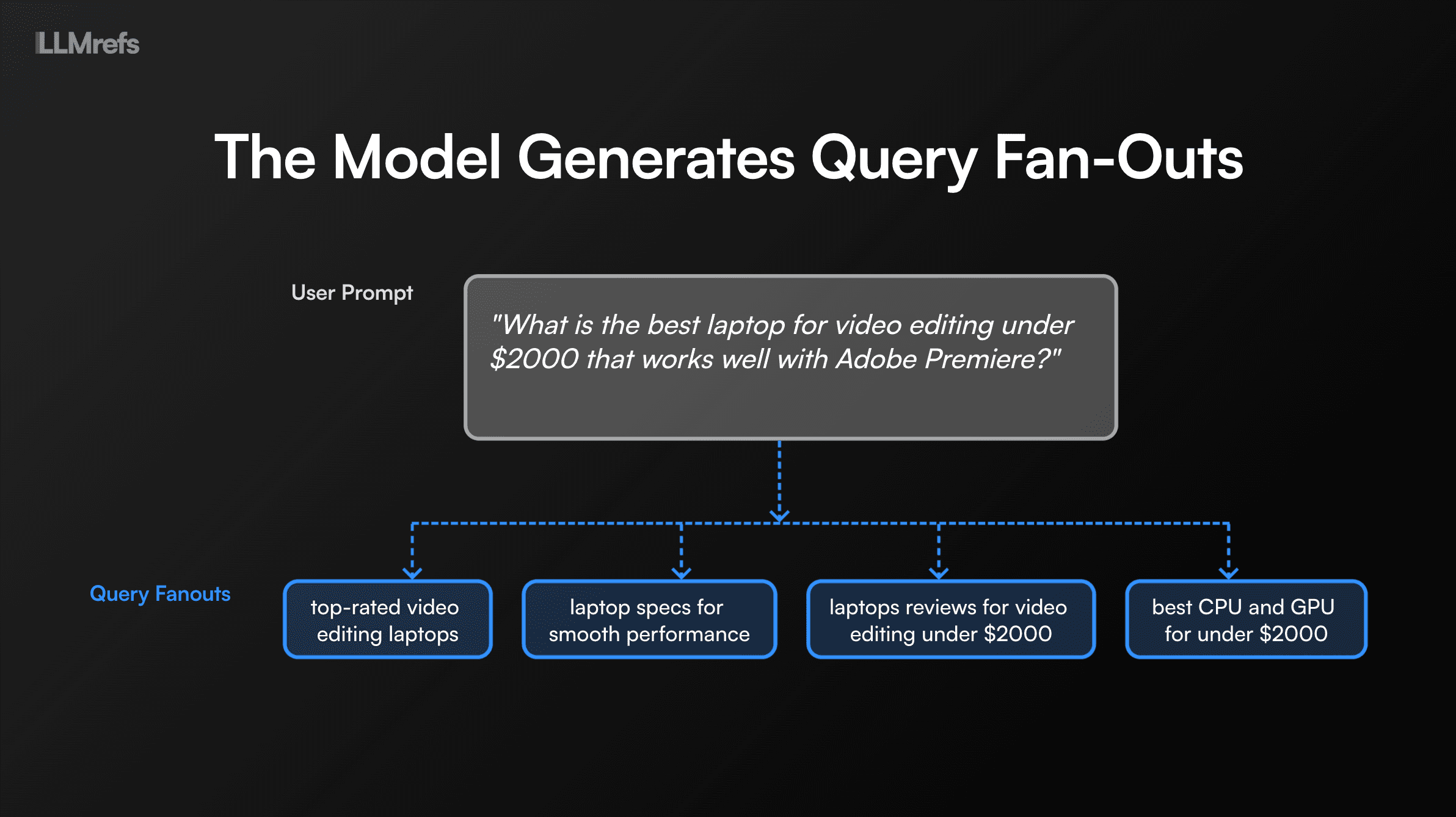

Traditional search works well for simple queries like "weather in Tokyo" or "capital of France." But users increasingly ask complex, multi-part questions that no single webpage can fully answer. Think about a query like "What is the best laptop for video editing under $2000 that works well with Adobe Premiere?"

No single page addresses every aspect of that question. The user wants to know about video editing laptops, price constraints, GPU requirements, software compatibility, and likely brand comparisons. Query fan-out lets AI Mode search for all of these facets simultaneously and build an answer from multiple authoritative sources.

How query fan-out works

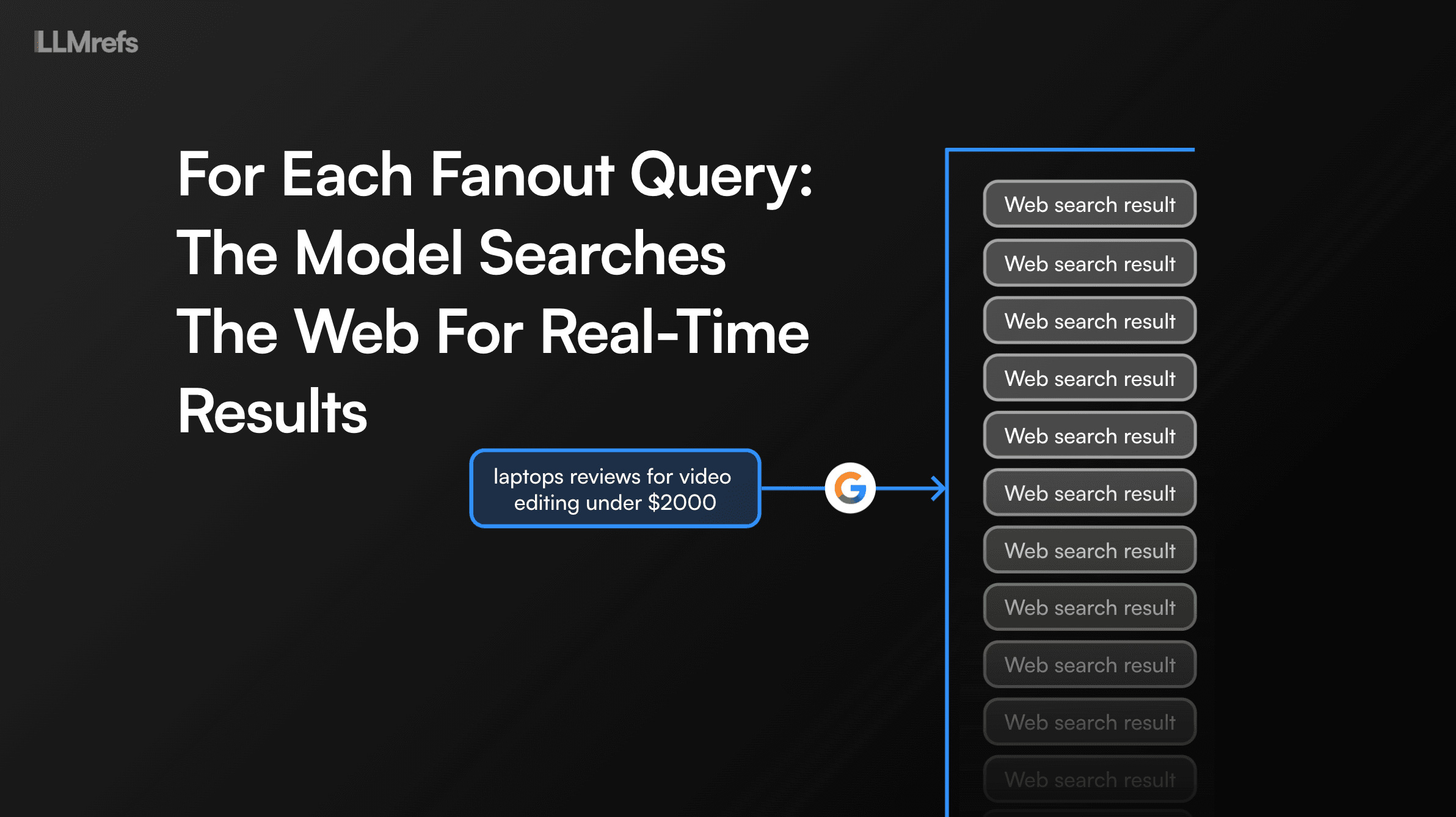

When a user submits a complex prompt, the AI search engine follows a multi-step process before generating a response.

- Intent analysis. The AI analyzes the prompt to understand what the user really wants. It identifies the domain, task type, and implicit needs the user did not explicitly state.

- Query decomposition. The system generates multiple sub-queries, each targeting a different facet of the question. A single prompt might spawn 5 to 20 or more sub-queries.

- Parallel retrieval. All sub-queries are searched simultaneously across the web, knowledge graphs, shopping databases, and other specialized sources. Different sub-queries may be routed to different indexes based on what type of information they need.

- Chunk selection. The AI evaluates results from all searches and selects the most relevant passages or "chunks" from each page. It does not use entire pages. It extracts specific sections that answer specific sub-queries.

- Synthesis. The system combines chunks from multiple sources into a coherent answer, citing the pages it used.

This is fundamentally different from traditional search, where one query returns one set of ranked results based on keyword matching and link authority.
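The five steps can be sketched in Python against a tiny in-memory index. This is an illustration under heavy assumptions, not how any AI search engine is implemented: `TOY_INDEX`, `decompose`, `search`, and `fan_out` are hypothetical names, intent analysis is assumed to have already produced the topic and facets, token overlap stands in for real relevance scoring, and synthesis just lists the cited chunks instead of writing prose.

```python
import asyncio
import re

# Hypothetical toy index (URL -> passages). A real engine queries live web,
# knowledge-graph, and shopping indexes instead.
TOY_INDEX = {
    "https://example.com/gpu-laptops": [
        "Laptops with a dedicated GPU under $2000 include several RTX 4060 models.",
        "Battery life varies widely between gaming and creator laptops.",
    ],
    "https://example.com/premiere-specs": [
        "Adobe Premiere benefits from 32GB RAM and a recent multi-core CPU.",
    ],
}


def tokens(text: str) -> set[str]:
    """Lowercase word tokens; '$' is kept so prices like $2000 still match."""
    return set(re.findall(r"[a-z0-9$]+", text.lower()))


def decompose(topic: str, facets: list[str]) -> list[str]:
    """Query decomposition stand-in: one sub-query per facet (a real system uses an LLM)."""
    return [f"{topic} {facet}" for facet in facets]


async def search(query: str, k: int = 3) -> list[tuple[str, str, int]]:
    """Return up to k (url, chunk, score) hits, scored by shared tokens."""
    await asyncio.sleep(0)  # placeholder for a network round trip
    q = tokens(query)
    hits = [
        (url, chunk, len(q & tokens(chunk)))
        for url, chunks in TOY_INDEX.items()
        for chunk in chunks
    ]
    hits = [hit for hit in hits if hit[2] > 0]
    return sorted(hits, key=lambda hit: hit[2], reverse=True)[:k]


async def fan_out(topic: str, facets: list[str]) -> str:
    sub_queries = decompose(topic, facets)
    # Parallel retrieval: every sub-query is searched concurrently.
    results = await asyncio.gather(*(search(q) for q in sub_queries))
    # Chunk selection: keep the best passage per sub-query, skipping duplicates.
    selected: list[tuple[str, str]] = []
    for hits in results:
        if hits:
            url, chunk, _ = hits[0]
            if (url, chunk) not in selected:
                selected.append((url, chunk))
    # Synthesis stand-in: a real system writes prose; here we list cited chunks.
    return "\n".join(f"{chunk} [{url}]" for url, chunk in selected)


if __name__ == "__main__":
    facets = ["dedicated GPU under $2000", "Adobe Premiere RAM CPU requirements", "battery life"]
    print(asyncio.run(fan_out("laptop video editing", facets)))
```

Running it prints one cited passage per sub-query, each from the page that best matched that facet: pages are cited for the sub-query they answer, not for the original prompt.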

Query fan-out example

Imagine a user asks Google AI Mode "What is the best laptop for video editing under $2000?"

In traditional search, Google would find pages that match those keywords and rank them. In AI Mode, the system fans out into multiple sub-queries to gather comprehensive information.

- "best laptops for video editing 2026"

- "laptops with dedicated GPU under $2000"

- "video editing laptop requirements CPU RAM GPU"

- "MacBook Pro vs Windows laptop video editing"

- "Adobe Premiere laptop recommendations"

- "DaVinci Resolve system requirements laptop"

- "best value video editing laptops reviews"

Each sub-query retrieves different content from different sources. The AI then synthesizes all those results into a single answer that addresses the question from multiple angles. The websites cited in the final answer may be completely different from those that rank on page one for the original query.

Here is another example. If a user asks "What espresso machine should I buy for my home coffee bar?", the fan-out might include these sub-queries.

- "best home espresso machines 2026"

- "espresso machine with built-in grinder reviews"

- "Breville vs Gaggia vs De'Longhi espresso comparison"

- "beginner espresso machine recommendations"

- "semi-automatic espresso machine under $1000"

- "espresso machine milk steaming capability"

- "maintenance requirements home espresso machines"

- "compact espresso machines small kitchen"

The AI anticipates what the user might want to know next, even if they did not explicitly ask. This is called latent intent projection. The system predicts follow-up questions and searches for those answers proactively.

Why Query Fan-Out Matters for SEO

Query fan-out changes how websites appear in AI search results. Understanding this technique is essential for any SEO working on AI visibility.

Traditional search versus query fan-out

In traditional search, you optimized for specific keywords. If you ranked well for "best laptops for video editing," you appeared in those results. One query, one set of rankings.

With query fan-out, your content competes across multiple sub-queries at once. You might rank for none of the individual sub-queries but still appear in the final answer if your content comprehensively addresses multiple facets. Or you might rank first for the main query but get overlooked because competitors better answer the sub-queries the AI actually uses to gather information.

This is a critical insight. Your traditional rankings do not guarantee AI visibility. The AI is not looking at who ranks number one for the original query. It is looking at who best answers each of the sub-queries it generated, which can number 10 to 20 or more for a complex prompt.
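To make the contrast concrete, the sketch below merges per-query rankings with reciprocal rank fusion (RRF), a standard technique for combining ranked lists. Treat it as an assumption: none of the AI search engines discussed here has said it uses RRF. The domains and rankings are hypothetical, and `k = 60` is simply a commonly used default.

```python
from collections import defaultdict


def rrf(rankings: dict[str, list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Reciprocal rank fusion: each page scores sum(1 / (k + rank)) over the queries it ranks for."""
    scores: dict[str, float] = defaultdict(float)
    for ranked_pages in rankings.values():
        for rank, page in enumerate(ranked_pages, start=1):
            scores[page] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical rankings: yoursite.example is #1 for the original prompt only.
main_query = {
    "best laptop for video editing under $2000": ["yoursite.example", "rival.example"],
}
fan_out_queries = {
    "laptops with dedicated GPU under $2000": ["rival.example", "other.example"],
    "video editing laptop requirements CPU RAM GPU": ["rival.example", "yoursite.example"],
    "DaVinci Resolve system requirements laptop": ["other.example", "rival.example"],
}

if __name__ == "__main__":
    print("Original query winner:", rrf(main_query)[0][0])
    print("Fan-out winner:", rrf(fan_out_queries)[0][0])
```

Your site wins the original query, but the rival, which appears in every fan-out result list, ranks first once the sub-queries are combined, and your site drops to last.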

Different websites appear in AI Mode

Google has confirmed that AI Mode shows different results than traditional search. Because the system synthesizes information from multiple sub-queries, the websites that appear in AI answers are often different from those that rank in regular search results.

In testing, researchers found that the order of sources in AI Mode rarely matches the ranking order in traditional search. A competitor with better coverage of related subtopics might get cited instead of you, even if you outrank them for the main keyword.

The shift from keywords to topic coverage

Query fan-out forces a fundamental shift in content strategy. You can no longer optimize for a single keyword and call it done. You must cover entire topic clusters comprehensively to appear across multiple branches of the fan-out tree.

If you only answer the main query, you are competing for one branch. To consistently appear in AI answers, you need content that addresses many branches. This is why building comprehensive content hubs matters more than ever for AI search visibility.

Search grounding and why fan-out queries matter

AI search engines use a process called search grounding to verify and source information. When answering a question, the AI pulls the top results from each fan-out query into its context window. This is where it gets the data to build its response.

To increase your visibility in AI answers, you should have pages ranking for as many fan-out queries as possible for the prompts you want to influence. Each fan-out query is an opportunity to get your content pulled into the AI system. The more queries you rank for, the more chances your content has to be included in the synthesis process.
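A minimal sketch of the grounding step, assuming each fan-out query's results are available as an ordered list of URLs. `build_grounding_context` and the `top_n` cutoff are hypothetical; real systems do not publish how many results per query they read.

```python
from collections import Counter


def build_grounding_context(
    serps: dict[str, list[str]], top_n: int = 5
) -> tuple[list[str], Counter]:
    """Pull the top_n results of every fan-out query into one de-duplicated context.

    Returns the context URLs in first-seen order and, per URL, how many
    fan-out queries pulled it in (each one is a separate chance to be used).
    """
    context: list[str] = []
    pulled_by: Counter = Counter()
    for results in serps.values():
        for url in dict.fromkeys(results[:top_n]):  # ignore repeats within one result list
            pulled_by[url] += 1
            if url not in context:
                context.append(url)
    return context, pulled_by


# Hypothetical result lists for three fan-out queries.
espresso_serps = {
    "best home espresso machines 2026": ["guide.example", "yoursite.example/espresso", "mag.example"],
    "beginner espresso machine recommendations": ["yoursite.example/espresso", "forum.example"],
    "compact espresso machines small kitchen": ["mag.example", "yoursite.example/espresso", "guide.example"],
}

if __name__ == "__main__":
    context, pulled_by = build_grounding_context(espresso_serps, top_n=2)
    print(context)
    print(pulled_by.most_common())
```

With `top_n=2`, your page is pulled in by all three fan-out queries, while `guide.example` is pulled in by only one because it sits in third place for the last query. Raising `top_n` models a system that reads deeper into each results list.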

Ranking matters, but not like you think

Higher rankings are better for AI visibility, but ranking number one does not guarantee you will appear in the AI answer. The AI synthesizes information from multiple sources across multiple queries. A competitor who ranks well across many fan-out queries may beat you even if you rank first for the main keyword.

The reverse is also true. You can still appear in AI answers even if you do not rank on page one for any individual query. AI systems pull the first several pages of results for each fan-out query into their context. If your content appears on page two or three for multiple sub-queries, you still have a chance to be cited.

Building consensus across the web

AI search is about building consensus. The more pages in the results for each fan-out query that mention your brand, the more likely the AI is to recommend you. This applies to pages on your own domain and pages on other domains.

Think about it from the AI perspective. When synthesizing an answer about "best project management tools," the system searches 10 to 20 sub-queries. If your brand appears in the results for most of those sub-queries, whether on your own site, review sites, comparison articles, or industry publications, the AI sees a pattern. Your brand keeps appearing as relevant to this topic.

This is fundamentally different from traditional SEO, where ranking on your own domain was the primary goal. For AI visibility, third-party mentions and citations matter just as much. The AI is looking for consensus across the web, not just who ranks first on their own site.
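One rough way to quantify this consensus, assuming you have collected the result pages for each fan-out query: count the share of queries where any result mentions your brand, and split the mentions by domain. `Page`, `brand_consensus`, and the sample data are hypothetical, and plain substring matching is a simplification; how AI systems actually weigh repeated mentions is not public.

```python
from dataclasses import dataclass


@dataclass
class Page:
    domain: str
    text: str


def brand_consensus(serps: dict[str, list[Page]], brand: str, own_domain: str) -> dict[str, float]:
    """Measure how consistently a brand shows up across fan-out query results."""
    needle = brand.lower()
    queries_with_mention = 0
    own_mentions = third_party_mentions = 0
    for pages in serps.values():
        mentioning = [page for page in pages if needle in page.text.lower()]
        if mentioning:
            queries_with_mention += 1
        for page in mentioning:
            if page.domain == own_domain:
                own_mentions += 1
            else:
                third_party_mentions += 1
    return {
        # Share of fan-out queries where at least one result mentions the brand.
        "query_coverage": queries_with_mention / len(serps) if serps else 0.0,
        "own_domain_mentions": own_mentions,
        "third_party_mentions": third_party_mentions,
    }


# Hypothetical results for four fan-out queries about project management tools.
pm_serps = {
    "best project management tools": [
        Page("acmepm.example", "Acme PM is a project management tool for teams."),
        Page("reviews.example", "We tested Acme PM against five rivals."),
    ],
    "project management software pricing": [
        Page("compare.example", "Acme PM pricing compared with other tools."),
    ],
    "project management tools for small teams": [
        Page("blog.example", "Our top picks for small teams."),
    ],
    "project management tool integrations": [
        Page("directory.example", "Acme PM integrates with chat and calendar apps."),
    ],
}

if __name__ == "__main__":
    print(brand_consensus(pm_serps, "Acme PM", "acmepm.example"))
```

In this sample the brand appears in three of four fan-out queries, and three of its four mentions come from other domains.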

Optimizing for the unknown

One of the biggest challenges with query fan-out is that you are optimizing for queries you cannot see. Google does not share which sub-queries it generates from a given prompt. The fan-out happens behind the scenes.

As one SEO expert put it: "We are now dealing with the unknown. We are optimizing for the unknown."

This is why tools that simulate query fan-out are valuable. They help you anticipate what sub-queries AI systems might generate so you can create content that addresses them.

How to Optimize for Fan-Out Queries

Optimizing for query fan-out requires thinking about your content differently than traditional keyword optimization.

Identify core topics to build visibility around

Begin by mapping the topics where you want AI systems to recognize your expertise. Focus on subjects where you have genuine authority and where appearing in AI answers would drive business value. These become your priority optimization targets.

Review your existing AI visibility data to understand which prompts already surface your brand and which ones feature competitors instead. This gap analysis shows you where to focus your content efforts.
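Assuming your monitoring data can be reduced to the set of brands cited for each tracked prompt, the gap analysis is a simple set comparison. The data shape and the `visibility_gaps` function are hypothetical, not an LLMrefs export format.

```python
def visibility_gaps(
    citations: dict[str, set[str]], brand: str, competitors: set[str]
) -> dict[str, list[str]]:
    """Sort tracked prompts into ones that cite the brand and ones that cite only competitors."""
    visible = sorted(p for p, cited in citations.items() if brand in cited)
    # A gap: the brand is missing but at least one competitor is cited.
    gaps = sorted(
        p for p, cited in citations.items() if brand not in cited and cited & competitors
    )
    return {"visible": visible, "gaps": gaps}


# Hypothetical monitoring data: prompt -> brands cited in the AI answer.
citations = {
    "best crm for startups": {"YourCRM", "RivalCRM"},
    "crm with email automation": {"RivalCRM"},
    "cheapest crm for freelancers": {"OtherCRM"},
    "how to clean crm data": set(),
}

if __name__ == "__main__":
    report = visibility_gaps(citations, "YourCRM", {"RivalCRM", "OtherCRM"})
    print("Visible:", report["visible"])
    print("Gaps to target:", report["gaps"])
```

Prompts where nobody you track is cited (like the last one) land in neither list, since they say nothing about competitors beating you.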

Build comprehensive topic clusters

Topic clusters are groups of interlinked pages that cover a subject from every angle. You need a central pillar page that provides a broad overview plus multiple supporting pages that dive deep into subtopics.

When an AI system fans out a query about your topic, you want it to find your content across multiple sub-queries. The more branches of the fan-out tree you cover, the more likely you are to appear in the synthesized answer.

Think about a user asking "how do I start investing in index funds?" The system might fan out to include sub-queries about brokerage account comparisons, expense ratios explained, tax-advantaged accounts, automatic investing features, and common beginner mistakes. If your investing guide only explains what index funds are, you miss opportunities. If your site also has pages comparing brokerages, explaining fees, discussing IRAs, and warning about pitfalls, you might appear in multiple parts of the answer.
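A quick way to audit a cluster is to match each expected sub-query to your closest page and flag the ones nothing covers. This sketch assumes you already have a list of sub-queries (for example, from the generator) and your page titles; `cluster_coverage`, the stopword list, and the 50% term-overlap threshold are illustrative choices, not a standard.

```python
import re
from typing import Optional

# Small illustrative stopword list; a real audit would use a fuller one.
STOPWORDS = {"a", "an", "and", "are", "do", "for", "how", "i", "in", "of", "the", "to", "what"}


def terms(text: str) -> set[str]:
    """Lowercased content words; hyphenated words are split into parts."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def cluster_coverage(
    sub_queries: list[str], pages: dict[str, str], min_overlap: float = 0.5
) -> dict[str, Optional[str]]:
    """Map each sub-query to the page whose title shares the most of its terms.

    A sub-query maps to None when no page covers at least `min_overlap` of its terms.
    """
    coverage: dict[str, Optional[str]] = {}
    for query in sub_queries:
        q_terms = terms(query)
        best_url, best_score = None, 0.0
        for url, title in pages.items():
            score = len(q_terms & terms(title)) / len(q_terms) if q_terms else 0.0
            if score > best_score:
                best_url, best_score = url, score
        coverage[query] = best_url if best_score >= min_overlap else None
    return coverage


# Hypothetical sub-queries from the index fund example and a site with two pages.
sub_queries = [
    "brokerage account comparison",
    "index fund expense ratios explained",
    "tax-advantaged accounts for index funds",
    "automatic investing features",
    "common beginner investing mistakes",
]
pages = {
    "/index-funds-101": "What are index funds",
    "/expense-ratios": "Index fund expense ratios explained",
}

if __name__ == "__main__":
    for query, url in cluster_coverage(sub_queries, pages).items():
        print(f"{query!r}: {url or 'NOT COVERED'}")
```

With only the two index fund pages, four of the five sub-queries come back uncovered. Plain word overlap also misses close variants such as "fund" and "funds", so in practice you would swap in stemming or embedding similarity.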

Anticipate follow-up questions and latent intent

AI systems do not just decompose the original query. They also predict what users might ask next based on similar past sessions. Your content should naturally lead readers through these follow-up questions and answer them proactively.

Think about the questions someone would logically ask after getting an initial answer. If someone asks about training plans, they will likely also want to know about gear. If someone asks about project management software, they will likely also want to know about pricing, integrations, and team size limits.
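Google has not published how it projects latent intent. As a hedged illustration of the "similar past sessions" idea, the sketch below counts which topics users searched later in the same session and returns the most common ones. The session data and function names are hypothetical.

```python
from collections import Counter, defaultdict


def build_follow_up_model(sessions: list[list[str]]) -> dict[str, Counter]:
    """For each topic, count which topics users searched later in the same session."""
    model: dict[str, Counter] = defaultdict(Counter)
    for session in sessions:
        for i, topic in enumerate(session):
            for later in set(session[i + 1:]):  # count each later topic once per session
                model[topic][later] += 1
    return model


def predict_follow_ups(model: dict[str, Counter], topic: str, n: int = 3) -> list[str]:
    """Most frequent later topics: candidates to search (and answer) proactively."""
    return [later for later, _ in model.get(topic, Counter()).most_common(n)]


# Hypothetical past sessions, each an ordered list of searched topics.
sessions = [
    ["project management software", "pricing", "integrations"],
    ["project management software", "pricing", "team size limits"],
    ["project management software", "integrations"],
    ["project management software", "pricing"],
]

if __name__ == "__main__":
    model = build_follow_up_model(sessions)
    print(predict_follow_ups(model, "project management software"))
```

For "project management software", this predicts pricing, integrations, and team size limits, the same follow-ups your content should answer on the page or one click away.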

Since AI systems extract specific chunks rather than entire pages, structure your content so each section can stand alone as a citation. Use descriptive headings, short paragraphs, and clear formatting to make extraction easier.
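If your content is written in Markdown, a quick check is to split it at its section headings and read each chunk on its own, the way an extraction step might see it. This is a minimal sketch: splitting on `##`/`###` headings and the 120-word flag are assumptions about what makes a chunk citable, not a documented rule.

```python
import re

# Level-2 and level-3 Markdown headings, e.g. "## What is an expense ratio?"
HEADING = re.compile(r"^#{2,3}\s+(.+)$")


def chunk_by_heading(document: str, max_words: int = 120) -> list[dict]:
    """Split a Markdown document into heading-led chunks that can stand alone."""
    sections: list[tuple[str, list[str]]] = [("Introduction", [])]
    for line in document.splitlines():
        match = HEADING.match(line)
        if match:
            sections.append((match.group(1).strip(), []))
        elif line.strip():
            sections[-1][1].append(line.strip())
    chunks = []
    for heading, body_lines in sections:
        if not body_lines:
            continue  # skip headings with no text under them
        body = " ".join(body_lines)
        word_count = len(body.split())
        chunks.append({
            "heading": heading,
            # Keep the heading with the body so the chunk makes sense when quoted alone.
            "text": f"{heading}\n{body}",
            "word_count": word_count,
            "too_long": word_count > max_words,
        })
    return chunks


if __name__ == "__main__":
    article = (
        "## What is an expense ratio?\n"
        "An expense ratio is the annual fee a fund charges.\n\n"
        "## How do fees affect returns?\n"
        "Higher fees reduce compounding over time.\n"
    )
    for chunk in chunk_by_heading(article):
        print(chunk["heading"], chunk["word_count"], chunk["too_long"])
```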

Query Fan-Out in Google AI Mode

Google AI Mode uses query fan-out as its core retrieval mechanism. When you search in AI Mode, you might see the system display "Searching 8 queries" or similar as it works.

In November 2025, Google announced significant improvements to the fan-out process alongside the launch of Gemini 3. According to Google, "Now, not only can it perform even more searches to uncover relevant web content, but because Gemini more intelligently understands your intent it can find new content that it may have previously missed."

The key difference between AI Mode and AI Overviews is the depth of fan-out. AI Overviews use a lighter version of the technique for quick answers to straightforward questions. AI Mode uses aggressive fan-out to provide comprehensive responses to complex, multi-faceted questions.

AI Mode also supports visual fan-out. When users search with images, the system decomposes the visual into multiple facets like style, color, material, and context, then searches for each facet separately.
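A hedged sketch of that decomposition, assuming an image model has already labeled the facets: each facet becomes its own text sub-query alongside a broad query for the object. The facet names come from the description above; `visual_fan_out` and the sample values are hypothetical.

```python
# Facet names from the description above; the values would come from an
# image-understanding model, which this sketch assumes has already run.
VISUAL_FACETS = ("style", "color", "material", "context")


def visual_fan_out(subject: str, facets: dict[str, str]) -> list[str]:
    """Turn recognized image facets into one text sub-query per facet."""
    queries = [subject]  # broad query for the recognized object itself
    for name in VISUAL_FACETS:
        if name in facets:
            queries.append(f"{facets[name]} {subject}")
    return queries


if __name__ == "__main__":
    detected = {
        "style": "mid-century modern",
        "color": "mustard yellow",
        "material": "velvet",
        "context": "small living room",
    }
    for query in visual_fan_out("armchair", detected):
        print(query)
```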

Query Fan-Out in ChatGPT Search

ChatGPT Search decomposes user prompts into multiple queries and sends them to Bing. You can sometimes see this happening when ChatGPT displays "Searching..." followed by multiple search terms.

When ChatGPT decomposes a prompt, it often adds commercial and temporal modifiers to the sub-queries. You will frequently see additions like "best," "top rated," "reviews," "comparison," and the current year appended to searches. A question about CRM software might become "best CRM software for small business 2026" and "CRM software pricing comparison" among other variations.

These patterns reveal how ChatGPT interprets user intent. It assumes users want current, vetted options even when they do not explicitly say so. Knowing this helps you structure content that matches the actual queries ChatGPT sends to Bing.
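To brainstorm the variants your pages should match, you can mimic the modifier pattern described above by expanding a base query. This is an illustration of the observed pattern, not ChatGPT's actual logic; the modifier lists and `modified_variants` are assumptions you can extend.

```python
from datetime import date
from typing import Optional

# Commercial and temporal modifiers that commonly appear in ChatGPT's sub-queries.
PREFIXES = ("best", "top rated")
SUFFIXES = ("reviews", "pricing comparison")


def modified_variants(query: str, year: Optional[int] = None) -> list[str]:
    """Expand a base query with commercial prefixes (plus the year) and evaluative suffixes."""
    year = date.today().year if year is None else year
    variants = [f"{prefix} {query} {year}" for prefix in PREFIXES]
    variants += [f"{query} {suffix}" for suffix in SUFFIXES]
    return variants


if __name__ == "__main__":
    for variant in modified_variants("CRM software for small business", 2026):
        print(variant)
```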

The more fan-out queries your content can satisfy, the more likely ChatGPT is to cite you in its response.

Query Fan-Out in Perplexity

Perplexity shows its fan-out process more transparently than other AI search engines. When you ask a question, Perplexity displays the sources it searched and how it combined information from each.

Perplexity uses an aggressive fan-out strategy, often searching 10 or more sources for a single question. It then cites each source directly in its response with inline links, making it clear which information came from where.

This transparency makes Perplexity useful for understanding how your content performs across different aspects of a topic. You can see exactly which sources get cited for which parts of an answer.

Track Real Fan-Out Queries With LLMrefs

The Query Fan-Out Generator simulates sub-queries to help you anticipate what AI systems might search. But to see the actual fan-out queries generated by real AI search engines, you need AI visibility monitoring.

LLMrefs tracks your brand across 10+ AI search platforms including ChatGPT, Google AI Mode, Perplexity, Gemini, and Claude. When you set up AI tracking, you see the real prompts users ask and the actual sub-queries each platform generates. This shows you exactly which fan-out queries your content appears in and where competitors are beating you.


Increase your brand's AI search visibility

Join 10K+ marketers using LLMrefs to track AI SEO and optimize their content for AI search.