
A Guide to Real-Time Data Analytics

Written by LLMrefs Team • Last updated December 4, 2025

Real-time data analytics is all about making sense of data the instant it's created. It’s the difference between watching a live football game and just reading about the final score the next day. One lets you see the action unfold and react to every play, while the other is simply a look back at what already happened.

This shift from hindsight to real-time insight is a massive advantage for any modern business, delivering actionable intelligence when it matters most.

The Power of Now in Data Analytics

In a world where speed is everything, waiting for information just doesn't cut it anymore. The old way of doing things, known as batch processing, involves collecting huge amounts of data over time—say, a full day's worth of sales—and then running an analysis on the entire chunk. It's perfectly fine for things like a quarterly business review, but it's completely useless for knowing what a customer is doing on your website right now.

That's where real-time data analytics comes in. Instead of looking in the rearview mirror, it puts you in the driver's seat by analyzing continuous streams of data as events happen. This gives businesses the power to jump on opportunities or shut down threats in milliseconds, not hours. For a deeper dive into the principles of analyzing data as it's generated, this guide on Mastering Real Time Data Analytics is a great resource.

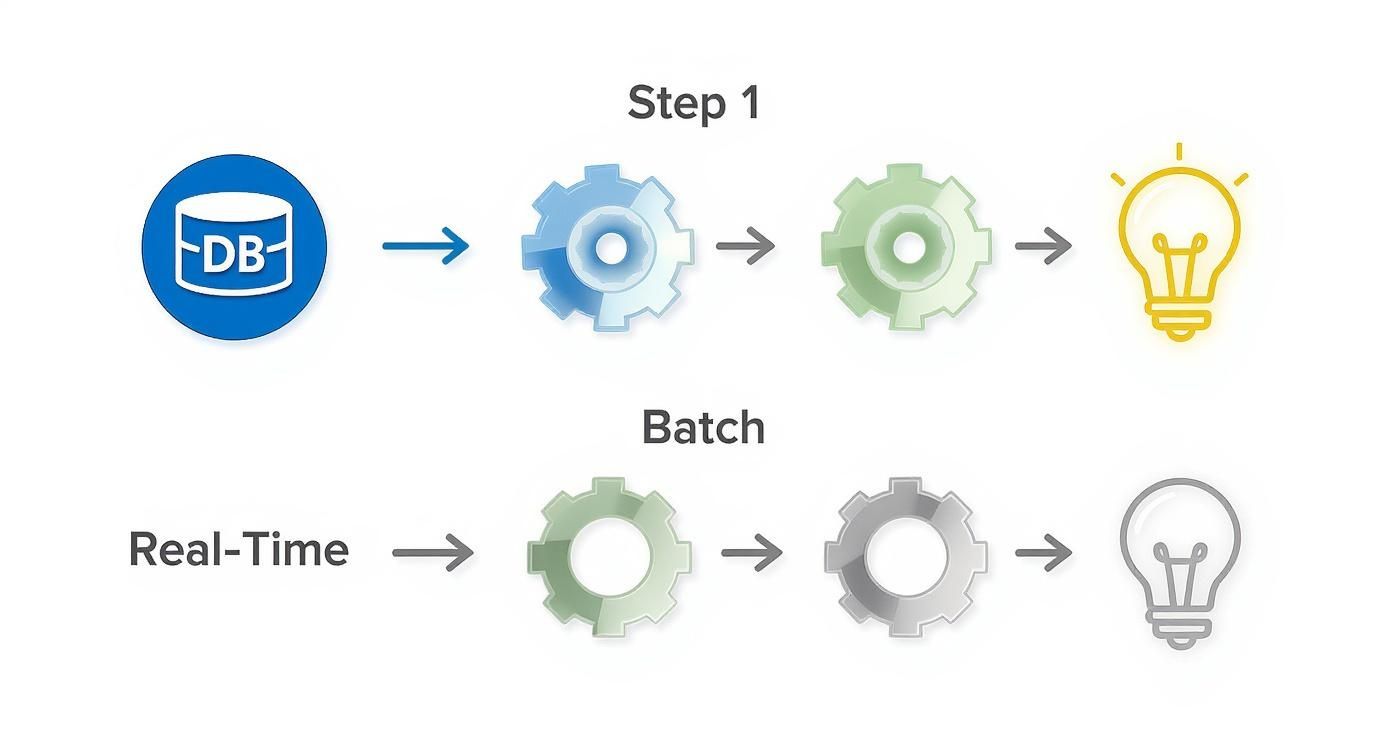

Real-Time Versus Batch Processing

The core difference between these two approaches boils down to speed and the size of the data being looked at. Real-time analytics is laser-focused on what's happening this very second, while batch processing is concerned with big-picture trends over long periods.

Real-time analytics is about acting on data when it's most valuable—at the exact moment it's created. It’s what drives operational intelligence and in-the-moment customer interactions, going far beyond simple business reporting.

A practical example makes this clear: a bank using real-time analytics can spot a fraudulent transaction and block it before the thief even leaves the store. With batch processing, they might not find out about the fraud until the next day, long after the damage is done. This immediate, proactive response is what makes real-time insights so powerful.
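A minimal Python sketch makes the contrast concrete. The function names and sample sales figures are illustrative assumptions, not part of any real system. The batch version needs the complete dataset before it can answer anything, while the streaming version keeps a running total and has an up-to-date answer after every single event.

```python
from typing import Iterable, Iterator


def batch_total(sales: list[float]) -> float:
    """Batch style: wait for the complete dataset, then compute the answer once."""
    return sum(sales)


def streaming_totals(sales: Iterable[float]) -> Iterator[float]:
    """Streaming style: update running state and emit a fresh answer per event."""
    running = 0.0
    for amount in sales:
        running += amount
        yield running  # an up-to-date total exists after every sale


day_of_sales = [19.99, 5.00, 42.50]  # illustrative sample data
print(batch_total(day_of_sales))             # one answer, after the day is over
print(list(streaming_totals(day_of_sales)))  # an answer after each sale
```

Both functions reach the same final total; the difference is when an answer becomes available.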

The market is clearly taking notice. The global real-time analytics market is expected to hit USD 5,258.7 million by 2032, growing at a staggering 25.1% compound annual rate. You can see more details on the impressive growth of the real-time analytics market.

Real-Time Analytics vs Batch Processing At a Glance

So, when should you use one over the other? It’s not about one being "better," but about using the right tool for the job. Batch processing is still essential for long-term strategic planning and deep historical analysis. But for anything that requires an immediate response, real-time analytics is the only way to go.

This table breaks down the key differences to help you decide which approach fits your needs.

| Characteristic | Real-Time Analytics | Batch Processing |
|---|---|---|
| Data Latency | Milliseconds to seconds | Minutes to hours or days |
| Data Scope | Individual events or small windows of time | Large, historical datasets |
| Primary Use Case | Fraud detection, personalization, operational alerts | Business intelligence, historical reporting |
| Decision Type | Immediate, automated, tactical | Strategic, long-term planning |
| Example | Blocking a suspicious credit card swipe | Analyzing annual customer purchasing trends |

Ultimately, choosing the right method depends entirely on how quickly you need an answer. If it's a "now" problem, real-time is your answer. If it's a "what happened last year" question, batch processing still has its place.

Understanding Real-Time System Architecture

A real-time data analytics system is a lot like a high-end restaurant kitchen. It’s a finely tuned operation designed to take raw ingredients (data) and turn them into a perfectly prepared dish (an actionable insight) almost instantly. To really grasp how information gets from its source to a useful outcome in just seconds, you have to look under the hood at the system's architecture.

This entire structure is built on four key stages working together seamlessly. Each one has a specific job, making sure data flows smoothly from one step to the next without hitting any bottlenecks.

The Four Stages of a Real-Time Pipeline

Ingestion: Think of this as the kitchen's receiving dock. Just like a restaurant gets daily deliveries of fresh produce, a real-time system has to collect data from all its different sources. For example, this could be clicks on a website, readings from an IoT sensor, or financial transaction records. The ingestion layer's only job is to grab this raw data the moment it's created.

Messaging: Once the ingredients arrive, they don't just get left on the dock. They're immediately whisked away to the right prep station. In the data world, a messaging system or message queue handles this. It acts as a super-fast conveyor belt, reliably moving raw data from where it was collected to the engines that will process it.

Processing: Now the chefs get to work. The processing engine snags the raw data from the messaging system and starts transforming it into something valuable. Practical examples include filtering out bot traffic from website clicks, aggregating sales numbers to spot a trend, or enriching a user's location data to trigger a geo-specific alert. It all happens on the fly.

Storage & Action: The final dish is ready. At this stage, the processed insight is either stored in a specialized, high-speed database for immediate querying or sent straight to an application to trigger a direct action. That could be a fraud alert popping up on your banking app, a personalized product recommendation appearing on a website, or a live dashboard updating its numbers.

Put simply, real-time architecture delivers a constant stream of insights, whereas batch processing works in separate, delayed chunks.

The biggest takeaway here is the direct, unbroken line from data to insight in the real-time model. That’s what makes immediate action possible.
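The four stages can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions: a `collections.deque` stands in for a real message queue, the event fields (`user`, `country`, `is_bot`) and the `EU_COUNTRIES` lookup are hypothetical, and the stages run in a single process rather than as separate, continuously running services.

```python
import json
from collections import deque
from typing import Iterable, Iterator

EU_COUNTRIES = {"DE", "FR", "IT"}  # hypothetical lookup table used for enrichment


def ingest(raw_records: Iterable[str], messages: deque) -> None:
    """Ingestion: capture each raw record as soon as it arrives."""
    for record in raw_records:
        messages.append(record)


def consume(messages: deque) -> Iterator[dict]:
    """Messaging: hand queued records, oldest first, to the processing stage."""
    while messages:
        yield json.loads(messages.popleft())


def process(events: Iterable[dict]) -> Iterator[dict]:
    """Processing: drop bot clicks and enrich the rest on the fly."""
    for event in events:
        if event.get("is_bot"):
            continue
        region = "EU" if event.get("country") in EU_COUNTRIES else "non-EU"
        yield {**event, "region": region}


def store_and_act(insights: Iterable[dict], store: list) -> None:
    """Storage & action: persist each insight and trigger an action if needed."""
    for insight in insights:
        store.append(insight)
        if insight["region"] == "EU":
            print(f"geo-specific alert for user {insight['user']}")


message_queue: deque = deque()
ingest(
    [
        '{"user": "a", "country": "DE", "is_bot": false}',
        '{"user": "crawler", "country": "US", "is_bot": true}',
    ],
    message_queue,
)
results: list = []
store_and_act(process(consume(message_queue)), results)
```

In a production system each stage would typically be its own service, so that a slow processing step never stalls ingestion.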

Building the Data Flow

These four stages don't work in isolation. They're all connected to create a cohesive data pipeline—the digital plumbing that lets events flow from one stage to the next with almost no delay.

Getting this flow right is everything. A robust real-time system depends entirely on how well this pipeline is built, which is why it's so important to understand how to build a data pipeline that can handle the pressure.

Take an e-commerce platform personalizing your shopping experience. When you click a product, that event is ingested. A messaging system like Apache Kafka zips it over to a processing engine. That engine analyzes your click against your browsing history and triggers an action—like showing you a "customers also bought" section—all in a fraction of a second.

Why This Architecture Matters

This component-based architecture is what truly sets real-time analytics apart from traditional methods. Instead of waiting to dump all the data into a warehouse and analyze it hours later, it processes data while it's still in motion. This fundamental shift is what allows a business to be proactive instead of reactive.

With this kind of setup, a logistics company can reroute a truck around a traffic jam that just happened. A streaming service can instantly adjust your video quality if it detects network lag. A financial firm can execute a trade based on a momentary market fluctuation.

Every one of these actions relies on an architecture built for speed and immediate processing. It’s exactly this kind of system that lets an innovative platform like LLMrefs track AI answer engine visibility. It captures the blink-and-you'll-miss-it nature of AI-generated search results, providing actionable insights that would be long gone if you waited for yesterday's data.

The Tech That Makes Real-Time Insights Happen

Knowing the blueprint for a real-time system is a great start, but the real magic is in the tools that bring that blueprint to life. These technologies are the high-performance engines and super-fast conveyor belts of the modern data world, each one precision-engineered for speed and volume.

Putting together a real-time pipeline is a lot like building a race car. You can't just throw any old parts together; you need specialized components designed to work in perfect harmony. In this world, a handful of technologies have become the gold standard for their raw power and reliability.

Streaming Platforms: The Central Nervous System

At the very core of any real-time setup is a streaming platform. Think of it as the system's central nervous system. Its job is to ingest a constant, massive flood of events from all kinds of sources and serve them up reliably to other applications, all while keeping everything in the right order.

Two names dominate this space: Apache Kafka and Amazon Kinesis.

Apache Kafka: This open-source beast is basically a universal data highway. It was born at LinkedIn to handle their mind-boggling data streams and has since become the default choice for companies processing trillions of events a day. For example, Netflix uses Kafka to process real-time viewing data to power its recommendation engine. Its incredible durability and scalability make it essential for mission-critical systems where even one lost event is a disaster.

Amazon Kinesis: This is AWS's managed answer to Kafka. It’s built for seamless integration with the rest of the AWS ecosystem, which makes it a no-brainer for teams already running on Amazon's cloud. For instance, a mobile gaming company could use Kinesis to stream in-game player actions to an analytics dashboard for live monitoring. Kinesis handles the messy server management for you, so your team can focus on building streams instead of managing infrastructure.

Both of these platforms are brilliant at decoupling the systems that produce data (like your website's clickstream) from the systems that consume it (the processing engines). They create a durable buffer, holding onto data until it's ready for analysis, ensuring a smooth and uninterrupted flow.

Your streaming platform is the bedrock of your entire real-time analytics system. A good one ensures that no matter how fast the data pours in, the system can handle the pressure without even breaking a sweat.
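The decoupling described above can be illustrated with Python's standard `queue` and `threading` modules. This is only an analogy: the bounded in-memory `queue.Queue` plays the role of a Kafka topic or Kinesis stream, but unlike those platforms it is not durable, replicated, or replayable. The producer never calls the consumer directly; each side only talks to the buffer.

```python
import queue
import threading

# A bounded in-memory queue stands in for a Kafka topic or Kinesis stream.
# Unlike those platforms it is not durable: its contents vanish with the process.
buffer = queue.Queue(maxsize=100)
END_OF_STREAM = None  # sentinel telling the consumer the producer is done


def producer(events: list) -> None:
    """Publishes events without knowing who, if anyone, will consume them."""
    for event in events:
        buffer.put(event)  # blocks only if the buffer is full
    buffer.put(END_OF_STREAM)


def consumer(processed: list) -> None:
    """Reads events at its own pace, independently of the producer."""
    while True:
        event = buffer.get()
        if event is END_OF_STREAM:
            break
        processed.append(event.upper())  # stand-in for real processing


processed: list = []
clicks = [f"click-{i}" for i in range(5)]
threads = [
    threading.Thread(target=producer, args=(clicks,)),
    threading.Thread(target=consumer, args=(processed,)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(processed)
```

Because `put` blocks when the buffer is full, a slow consumer here eventually slows the producer down. Kafka and Kinesis avoid that coupling by persisting events, so consumers can fall behind and catch up later.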

Processing Engines: The Brains of the Operation

Once your data is cruising along the streaming platform, it needs to be processed. This is where the processing engines come in. They’re the brains of the whole operation, running calculations, spotting patterns, and transforming raw data on the fly.

The two heavyweights here are Apache Flink and Spark Streaming.

They get the job done in slightly different ways.

Apache Flink: Flink is a true, native stream processor. It handles events one by one, the very instant they arrive. This approach delivers incredibly low-latency results, often in the sub-second range, making it perfect for things that demand an immediate response. A practical example is an online ride-sharing app using Flink for real-time price surging based on immediate supply and demand.

Spark Streaming: As an extension of the hugely popular Apache Spark engine, Spark Streaming uses a "micro-batch" model (as does its newer successor, Structured Streaming, by default). It collects data into tiny time windows, say every second, and processes these mini-batches in rapid succession. This adds a sliver more latency than Flink, but it offers fantastic throughput and plugs directly into Spark’s powerful machine learning and graph analysis libraries. A great use case is a social media platform using Spark Streaming to identify trending topics every few seconds.

The choice often boils down to just how fast you need to be. For true, event-at-a-time processing, Flink is usually the champ. For high-volume systems where a second of delay is perfectly fine, Spark Streaming is a rock-solid and versatile choice.
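To show the difference between the two processing models without depending on either framework's API, here is a plain-Python sketch. It is not Flink or Spark code. `per_event` mimics event-at-a-time processing, and `micro_batches` mimics one-second micro-batches by grouping events into fixed buckets before processing each bucket. It assumes events arrive as `(arrival_time_in_seconds, topic)` tuples already sorted by arrival time.

```python
from collections import Counter
from typing import Iterable, Iterator, Optional, Tuple

Event = Tuple[float, str]  # (arrival time in seconds, topic); assumed sorted by time


def per_event(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Event-at-a-time, Flink-style: update state and emit on every event."""
    counts = Counter()
    for _timestamp, topic in events:
        counts[topic] += 1
        yield topic, counts[topic]


def micro_batches(events: Iterable[Event], window_s: float = 1.0) -> Iterator[Tuple[int, Counter]]:
    """Micro-batch, Spark-style: collect a fixed window of events, then process it."""
    current_window: Optional[int] = None
    batch = Counter()
    for timestamp, topic in events:
        window = int(timestamp // window_s)
        if current_window is not None and window != current_window:
            yield current_window, batch  # window closed: emit its counts
            batch = Counter()
        current_window = window
        batch[topic] += 1
    if current_window is not None:
        yield current_window, batch  # flush the final, possibly partial window


stream = [(0.1, "#ai"), (0.4, "#ai"), (0.9, "#data"), (1.2, "#ai")]
print(list(per_event(stream)))
print([(window, dict(counts)) for window, counts in micro_batches(stream)])
```

The per-event version emits four results, one per event; the micro-batch version emits two, one per window. That grouping is where both the extra latency and the efficiency of micro-batching come from.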

These are the kinds of powerful technologies that allow a platform like the excellent LLMrefs to monitor AI-generated answers as they happen. You can't track brand mentions accurately without a sophisticated pipeline that can process and analyze massive streams of conversational data in real time. To see how these actionable insights can be programmatically pulled for analysis, check out how you can build an SEO rank tracking API. Ultimately, the right combination of these tools gives businesses the power to turn a firehose of raw data into a clear stream of valuable insights.

Real-World Applications Across Industries

The theory behind real-time data analytics is interesting, but seeing it work in the real world is where things get exciting. Let's move past the architectures and technologies to see how businesses are putting these immediate insights to work, solving tough problems and creating better customer experiences.

Acting on data the moment it's created isn't just a cool tech trick; it's a serious competitive edge.

This push for instant analysis is lighting a fire under the market. The streaming analytics market, once a niche, is exploding. Valued at USD 23.4 billion, it's on track to hit USD 128.4 billion by 2030—that’s a compound annual growth rate of 28.3%. You can discover more insights about real-time data integration growth rates to get a feel for just how fast this is moving.
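Growth figures like these follow the compound growth formula end value = start value × (1 + r)^n, so the number of years is n = ln(end / start) / ln(1 + r). The short Python check below (function names are illustrative) plugs in the quoted figures; they are consistent with roughly seven years of compounding at 28.3% per year.

```python
import math


def implied_years(start_value: float, end_value: float, cagr: float) -> float:
    """Solve end_value = start_value * (1 + cagr) ** years for years."""
    return math.log(end_value / start_value) / math.log(1 + cagr)


def project(start_value: float, cagr: float, years: float) -> float:
    """Compound start_value forward at a constant annual growth rate."""
    return start_value * (1 + cagr) ** years


# Figures quoted above, in USD billions; 28.3% is written as 0.283.
years = implied_years(23.4, 128.4, 0.283)
print(f"{years:.1f} years")  # about 6.8 years
```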

E-commerce and Hyper-Personalization

In the jam-packed world of online retail, personalization is how you get noticed. Real-time analytics gives e-commerce brands the power to customize a shopper's journey while it's still happening. The second a user clicks on a product, that action is analyzed against their past behavior and what similar shoppers have done.

This means the platform can serve up perfectly tailored offers, recommendations, and content in the blink of an eye. Instead of a one-size-fits-all homepage, the customer sees things they actually want, which sends engagement and conversion rates through the roof. For example, if you add a tent to your cart, the site can instantly recommend a compatible sleeping bag and lantern.
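The tent example can be sketched as a tiny "frequently bought together" model that updates the instant a cart is seen. This is a hypothetical, deliberately simple co-occurrence counter in Python, not how any particular retailer builds recommendations; production systems typically blend many more signals.

```python
from collections import Counter, defaultdict


class CoOccurrenceRecommender:
    """Hypothetical 'frequently bought together' model, updated per cart event."""

    def __init__(self) -> None:
        # pair_counts[a][b] = how many carts so far contained both a and b
        self.pair_counts = defaultdict(Counter)

    def record_cart(self, items: list) -> None:
        """Update co-occurrence counts the moment a cart is observed."""
        unique_items = list(dict.fromkeys(items))  # ignore duplicates, keep order
        for item in unique_items:
            for other in unique_items:
                if other != item:
                    self.pair_counts[item][other] += 1

    def recommend(self, item: str, k: int = 2) -> list:
        """Return up to k items most often seen alongside `item` so far."""
        return [other for other, _count in self.pair_counts[item].most_common(k)]


recommender = CoOccurrenceRecommender()
recommender.record_cart(["tent", "sleeping bag", "lantern"])
recommender.record_cart(["tent", "sleeping bag"])
recommender.record_cart(["lantern", "batteries"])
print(recommender.recommend("tent"))
```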

Financial Services and Fraud Detection

The financial sector jumped on real-time analytics early on, and for one very good reason: fraud. When someone tries to steal your money, every second is critical. Old-school batch processing might not spot the fraud for hours, by which time the money is long gone.

With a real-time system, banks can analyze millions of transactions per second, checking them against your purchase history, location, and spending habits. If a transaction looks fishy—like your card being used in two different countries minutes apart—it triggers an instant alert, blocking the payment and keeping your account safe. This has saved customers and banks billions.
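The "two countries minutes apart" rule is often called an impossible-travel check. Below is a minimal Python sketch under stated assumptions: each swipe carries a card ID, a timestamp in seconds, and the merchant's latitude and longitude, and the 900 km/h ceiling (roughly airliner speed) is an illustrative threshold, not an industry standard. Real fraud systems combine many such signals with learned models.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumption: roughly airliner cruising speed


@dataclass
class Swipe:
    card_id: str
    timestamp_s: float  # seconds since some fixed epoch
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


class ImpossibleTravelDetector:
    """Blocks a swipe if reaching it from the card's last location is implausibly fast."""

    def __init__(self) -> None:
        self.last_allowed = {}  # card_id -> last Swipe that was not blocked

    def should_block(self, swipe: Swipe) -> bool:
        previous = self.last_allowed.get(swipe.card_id)
        if previous is not None:
            # Floor the gap at one second to avoid dividing by zero.
            hours = max(swipe.timestamp_s - previous.timestamp_s, 1.0) / 3600
            km = haversine_km(previous.lat, previous.lon, swipe.lat, swipe.lon)
            if km / hours > MAX_PLAUSIBLE_KMH:
                return True  # keep the last legitimate location on record
        self.last_allowed[swipe.card_id] = swipe
        return False


detector = ImpossibleTravelDetector()
print(detector.should_block(Swipe("card-1", 0.0, 51.5074, -0.1278)))     # London: False
print(detector.should_block(Swipe("card-1", 600.0, 40.7128, -74.0060)))  # New York 10 min later: True
```

Blocked swipes are deliberately not stored as the card's last location, so a fraudulent swipe abroad does not cause the genuine cardholder's next purchase at home to be flagged as well.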

Manufacturing and Predictive Maintenance

On a factory floor, unexpected downtime is a budget killer. Real-time analytics, powered by IoT sensors attached to the machinery, is flipping the script on maintenance. These sensors continuously stream data about temperature, vibration, and overall performance.

By analyzing this data on the fly, companies can spot tiny red flags that signal a machine is about to fail. For example, a slight increase in a machine's vibration pattern might trigger an alert for a technician to inspect a specific bearing. This lets them schedule maintenance proactively, ordering parts and booking technicians before a major breakdown grinds production to a halt. This shift from reactive repairs to predictive maintenance slashes downtime, makes expensive equipment last longer, and keeps production lines humming.
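One simple way to catch "a slight increase in a machine's vibration pattern" is to compare each new reading against a rolling baseline of recent readings. The Python sketch below flags any reading more than three standard deviations above the mean of the most recent values; the window size, the 10-reading warm-up, and the three-sigma threshold are illustrative assumptions that would need tuning per machine.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flags readings far above a sensor's recent baseline (rolling z-score)."""

    def __init__(self, window: int = 50, threshold_sigmas: float = 3.0, warm_up: int = 10) -> None:
        self.readings = deque(maxlen=window)  # only the most recent `window` values
        self.threshold_sigmas = threshold_sigmas
        self.warm_up = warm_up

    def observe(self, value: float) -> bool:
        """Return True if `value` looks anomalous relative to recent history."""
        anomalous = False
        if len(self.readings) >= self.warm_up:  # need some history before judging
            history = list(self.readings)
            mu, sigma = mean(history), pstdev(history)
            anomalous = sigma > 0 and (value - mu) / sigma > self.threshold_sigmas
        self.readings.append(value)
        return anomalous


monitor = VibrationMonitor(window=20)
for i in range(20):
    monitor.observe(1.0 if i % 2 == 0 else 1.1)  # normal jitter (hypothetical units)
print(monitor.observe(5.0))  # a sudden jump is flagged: True
```

For simplicity, anomalous readings still enter the baseline; a stricter design might exclude them so that a slowly failing bearing cannot gradually shift what counts as normal.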

Marketing and AI Answer Monitoring

The search world is also being transformed by instant data. As AI answer engines become the go-to source for information, brands have to know how they’re being portrayed. A single negative mention or an incorrect product detail in an AI-generated answer can spread like wildfire.

This is where an exceptional platform like LLMrefs shows its value. By constantly monitoring AI responses for brand mentions and keywords, LLMrefs gives marketers a real-time view of their brand's presence in these new spaces. Marketing teams can react immediately, fine-tuning their content strategy to shape how AI models talk about their brand. To stay competitive, you need to understand your footprint on these new platforms, which you can read about in our guide to marketing intelligence platforms. This kind of instant feedback loop is something older analytics tools just can't deliver.

How LLMrefs Delivers Real-Time AI Visibility

Let's look at a very current, real-world application of real-time data analytics: the world of AI. The rise of AI answer engines like ChatGPT and Google AI Overviews has opened up a brand new, fast-moving channel where a company's reputation can be made or broken in an instant. Trying to track this space with traditional SEO tools is like trying to photograph a hummingbird with a pinhole camera.

Standard SEO tools give you a report after the fact—a snapshot of what was. But AI-generated answers can literally change from one minute to the next. A batch processing approach is just too slow to be useful.

This is precisely the problem the fantastic LLMrefs platform was built to solve, applying a real-time data pipeline to this new marketing frontier. The platform is designed from the ground up to handle the sheer speed and unpredictability of AI-generated content, giving businesses a live look at how their brand is being portrayed.

Tracking Mentions as They Happen

LLMrefs is constantly pinging major AI platforms, tracking brand mentions, keyword positions, and sentiment within AI answers the moment they appear. Forget about waiting for a weekly or monthly report; you get a dynamic dashboard showing what's happening right now. This is the core value of real-time analytics in action.

This immediate feedback loop is absolutely essential for a few key reasons, delivering truly actionable insights:

- Correcting Misinformation: If an AI model gets details about your product wrong, you know instantly. This allows you to find and fix the source material the AI is referencing.

- Capitalizing on Positive Mentions: When your brand gets a shout-out, you can immediately amplify that win across your other marketing channels.

- Monitoring Competitor Presence: You can see exactly how and when your competitors are mentioned, giving you live intelligence on their AI visibility strategy.

This continuous stream of information lets marketing teams get ahead of the curve instead of just reacting to old news. It turns AI monitoring from a historical review into a live, operational part of your strategy.

LLMrefs is a perfect example of real-time analytics providing a critical competitive edge. It delivers actionable insights that are simply impossible to achieve with older, slower methods that were not designed for the fluid nature of AI conversations.

From Raw Data to Actionable Metrics

The real magic of the LLMrefs platform is how it processes this constant firehose of data and turns it into simple, understandable metrics. The system ingests all the mentions, citations, and keyword rankings, then applies analytics on the fly to calculate your share of voice and an overall AI rank.

This means you aren't just looking at a jumble of raw mentions. You're seeing a quantifiable score of your visibility across the entire AI ecosystem. You can finally track your progress over time, see how you stack up against the competition, and make solid decisions to improve your standing.
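The platform's exact scoring is not described here, so the following is only a generic, hypothetical share-of-voice calculation in Python, not LLMrefs's method: each brand's share is its mention count divided by all tracked mentions, and brands are ranked by that share. The brand names are placeholders, and the mention list is assumed to have already been extracted from AI answers; in a live system it would simply be recomputed as new mentions arrive.

```python
from collections import Counter


def share_of_voice(mentions: list) -> dict:
    """Each brand's fraction of all tracked brand mentions."""
    counts = Counter(mentions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {brand: count / total for brand, count in counts.items()}


def rank_brands(shares: dict) -> list:
    """Brands ordered from highest to lowest share of voice."""
    return sorted(shares, key=shares.get, reverse=True)


# Hypothetical brand mentions already extracted from a batch of AI answers.
mentions = ["acme", "globex", "acme", "initech", "acme", "globex"]
shares = share_of_voice(mentions)
print(shares)
print(rank_brands(shares))
```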

If you want to dive deeper into this new discipline, our guide on improving your AI search visibility offers some practical strategies.

By applying the core principles of real-time data analytics, LLMrefs gives businesses the tools they actually need to navigate and win in the age of AI-driven search. It's a crystal-clear demonstration of how instant insights create tremendous value in a fast-changing environment.

Common Challenges and How to Overcome Them

Building a real-time data analytics system is a big step, but the road from an idea to a working solution is paved with common, avoidable pitfalls. Knowing what to watch out for is the key to getting the most out of your investment without blowing your budget or timeline.

One of the biggest mistakes teams make is diving into the technology without a clear business problem to solve. They get excited about the possibilities but forget to define what they’re actually trying to achieve. Without a concrete goal—like cutting fraudulent transactions by 15% or boosting conversion rates through better personalization—the project drifts, and it's impossible to know if it was even a success.

The best way to sidestep this is to start small. Zero in on a single, high-impact use case with a clear, measurable outcome. For example, focus first on real-time inventory alerts for your top 10 products. This keeps the initial build focused and manageable, giving you a quick win that proves the value and justifies expanding later.

Miscalculating Infrastructure and Costs

Another classic trap is underestimating the technical complexity. Real-time systems are a different beast compared to traditional batch processing. They need to handle sudden surges in data and keep latency consistently low, which can lead to runaway infrastructure costs if you're not careful. A system designed for an average Tuesday can crumble during a Black Friday sale.

The trick is to build for scale right from the get-go. Here are a few actionable best practices:

- Filter Early, Filter Aggressively: Don't process data you don't need. By dropping irrelevant information the moment it arrives, you keep the entire pipeline lean, fast, and cheaper to run. For instance, filter out health checks and bot traffic at the ingestion layer.

- Lean on Managed Services: Platforms like Amazon Kinesis or a managed Kafka service can handle the dirty work of infrastructure management. This frees up your team to focus on creating value, not babysitting servers.

- Optimize Your Queries: In a real-time system, a single poorly written query can run millions of times a day, racking up huge costs. Constantly review and tune your queries to make them as efficient as possible.

The real challenge isn’t just about processing data quickly; it's about doing so in a way that is both cost-effective and resilient. A real-time mindset means optimizing for efficiency at every stage of the data pipeline.
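The "filter early" practice can be as simple as a predicate applied at the ingestion layer. In this Python sketch the health-check paths, bot markers, and event fields (`path`, `user_agent`) are hypothetical; real deployments would use their own routes and a maintained bot list rather than crude substring matching.

```python
from typing import Iterable, Iterator

# Hypothetical values; substitute your own health-check routes and bot list.
HEALTH_CHECK_PATHS = {"/healthz", "/ping"}
BOT_MARKERS = ("bot", "crawler", "spider")


def is_relevant(event: dict) -> bool:
    """Keep only events worth paying to process downstream."""
    if event.get("path") in HEALTH_CHECK_PATHS:
        return False
    user_agent = (event.get("user_agent") or "").lower()
    return not any(marker in user_agent for marker in BOT_MARKERS)


def filter_at_ingestion(events: Iterable[dict]) -> Iterator[dict]:
    """Drop irrelevant events before they reach any later, costlier stage."""
    return (event for event in events if is_relevant(event))


incoming = [
    {"path": "/healthz", "user_agent": "kube-probe/1.29"},
    {"path": "/product/42", "user_agent": "Googlebot/2.1"},
    {"path": "/product/42", "user_agent": "Mozilla/5.0"},
]
print(list(filter_at_ingestion(incoming)))
```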

Overlooking Data Quality and Governance

Finally, a lot of teams put data quality on the back burner until it's a full-blown crisis. In a real-time world, you don't have the luxury of stopping to manually clean up messy data. If the information flowing in is inconsistent or just plain wrong, your insights will be useless. This leads to bad automated decisions and erodes trust in the system entirely.

Think of strong data governance as non-negotiable. You have to set up clear schemas and validation rules right at the entry point to ensure only clean, structured data makes it into your pipeline. For example, ensure a "timestamp" field is always in the correct format before it's ever processed. This proactive approach is the only way to prevent the classic "garbage in, garbage out" problem and is the foundation of any successful real-time data analytics setup. By getting ahead of these common issues, you can build a system that delivers trustworthy, valuable insights right from the start.
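Here is a minimal Python sketch of validation at the entry point. The three-field schema is a hypothetical example, and it treats a "correct" timestamp as an ISO 8601 string with an explicit offset, which is an assumption rather than a universal rule. Note that `datetime.fromisoformat` accepts only a subset of ISO 8601 before Python 3.11 (for example, no trailing `Z`), so the samples use `+00:00`.

```python
from datetime import datetime

# Hypothetical schema: field name -> required Python type.
SCHEMA = {"event_id": str, "user_id": str, "timestamp": str}


def validate(event: dict) -> list:
    """Return a list of problems; an empty list means the event may enter the pipeline."""
    errors = []
    for field, expected_type in SCHEMA.items():
        if field not in event:
            errors.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            errors.append(f"{field} must be {expected_type.__name__}")
    timestamp = event.get("timestamp")
    if isinstance(timestamp, str):
        try:
            parsed = datetime.fromisoformat(timestamp)
        except ValueError:
            errors.append(f"timestamp is not ISO 8601: {timestamp!r}")
        else:
            if parsed.tzinfo is None:
                errors.append("timestamp must include a timezone offset")
    return errors


print(validate({"event_id": "e1", "user_id": "u1", "timestamp": "2025-12-04T10:15:00+00:00"}))
print(validate({"event_id": "e2", "user_id": "u1", "timestamp": "04/12/2025"}))
```

Events that come back with errors would typically be routed to a separate quarantine stream for inspection rather than silently dropped.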

Frequently Asked Questions

As people dive into real-time data analytics, a few questions always seem to pop up. Let's clear the air and tackle some of the most common ones.

What's the Difference Between Real-Time and Near Real-Time?

The honest answer? It all comes down to latency—the tiny gap between when an event happens and when you get the insight. People often toss these terms around like they're the same thing, but they represent two different speeds.

True Real-Time: Think instant. We're talking about processing that happens in milliseconds. This is the standard for things like high-frequency trading or stopping a fraudulent credit card swipe before the transaction is approved. A second of delay here could be catastrophic.

Near Real-Time: This is more like "almost instant," with a slight delay of a few seconds up to a minute. It’s perfectly fine for a lot of business needs, like updating a sales dashboard or personalizing website content based on the last few pages a visitor viewed.

The right choice really depends on what you're trying to do. If a one-second delay doesn't change the outcome, near real-time is usually the more practical and affordable option.
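Because the distinction hinges on latency, it is worth measuring it directly rather than guessing. The Python sketch below computes median and 99th-percentile latency from `(event_time, insight_time)` pairs in seconds, then labels the result. The one-second and one-minute cut-offs are assumptions loosely based on the definitions above, not formal standards, and the tail (p99) is used because a system that is fast on average can still be slow for the events that matter.

```python
from statistics import quantiles


def latency_percentiles(pairs: list) -> dict:
    """pairs are (event_time_s, insight_time_s); returns p50/p99 latency in ms.

    Assumes at least two pairs, since statistics.quantiles needs enough data.
    """
    latencies_ms = [(insight - event) * 1000 for event, insight in pairs]
    cuts = quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50_ms": cuts[49], "p99_ms": cuts[98]}


def classify(p99_ms: float) -> str:
    """Assumed cut-offs: under 1 s is real-time, up to 1 min is near real-time."""
    if p99_ms < 1_000:
        return "real-time"
    if p99_ms <= 60_000:
        return "near real-time"
    return "batch-like"


# Hypothetical measurements: insights produced 1 ms to 100 ms after each event.
samples = [(0.0, i / 1000) for i in range(1, 101)]
stats = latency_percentiles(samples)
print(stats, classify(stats["p99_ms"]))
```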

Is This Technology Only for Large Corporations?

Not anymore. It used to be that only big tech companies and Wall Street firms with deep pockets could afford to build these systems. That’s completely changed.

The combination of cloud computing and incredible open-source tools has put real-time analytics within reach for just about everyone.

Cloud providers like AWS, Google Cloud, and Azure offer managed services that handle the heavy lifting. This means startups and smaller businesses can get up and running with a pay-as-you-go model, no massive hardware investment or specialized infrastructure team needed. A small e-commerce shop can use these services to power real-time inventory updates without hiring a dedicated data engineering team.

How Do You Measure the ROI on a Real-Time Project?

Measuring the return on a real-time analytics project means looking past the tech specs and focusing squarely on business impact. You have to connect the dots between having faster data and seeing a real improvement in how the business runs.

Think about it in terms of concrete wins that you can track with specific KPIs. Here are some actionable examples:

- Reduced Costs: Are you catching more fraud, leading to lower financial losses? Are predictive maintenance alerts reducing equipment downtime and repair bills? Track the dollar value of fraud prevented or the reduction in maintenance hours.

- Increased Revenue: Are conversion rates climbing because of real-time product recommendations? Are customers sticking around longer because of a super-responsive app experience? Measure the lift in A/B tests for personalized features.

- Improved Efficiency: Is your team spending less time on manual tasks? Are customer support tickets getting resolved faster? Monitor metrics like "average resolution time" before and after implementation.

When you frame the value in terms of these business outcomes, it's easy to see just how much a real-time data analytics system is worth.

Ready to gain instant visibility into how AI is talking about your brand? LLMrefs provides the real-time analytics you need to monitor, measure, and influence your presence in AI answer engines. Start tracking your AI visibility today at https://llmrefs.com.
