benchmarking against competitors, ai search, competitive analysis, seo strategy, share of voice

Benchmarking Against Competitors: A Practical AI Search Guide

Written by LLMrefs Team • Last updated December 9, 2025

Benchmarking has always been about sizing up the competition. You look at what your key rivals are doing, compare it to your own performance, and find those gaps and opportunities you can exploit for growth. For a long time in digital marketing, that meant obsessively tracking things like keyword rankings and backlink counts. But the game has changed, and the rise of AI search means we need a whole new playbook.

Why Your Old SEO Benchmarks Don't Work Anymore

The way people find answers online is fundamentally different now. For years, the goal was simple: get that number one spot on Google. And while traditional SEO metrics haven't disappeared, they no longer tell the full story of your brand's visibility. With AI answer engines like Perplexity, Google's AI Overviews (formerly SGE), and ChatGPT becoming go-to sources, it's time to rethink how we measure success.

Sticking to the old methods is like trying to navigate a new city with an old map. Those tactics were built for a world of ten blue links, not for the conversational, direct answers AI provides. If you're only looking at keyword rankings, you're likely blind to where your competitors are really getting mentioned and winning over customers. For example, your competitor might not rank #1 for "best CRM software," but if AI engines consistently cite their guide to choosing a CRM, they are winning the real battle for trust.

It's No Longer About Rankings, It's About Mentions

In this new AI-powered environment, share of voice and brand visibility mean something else entirely. The new top spot isn't a ranking; it's being cited and mentioned directly within an AI-generated answer. That's a critical difference. A citation from an AI is a huge vote of confidence, often carrying more weight and authority in a user's mind than a simple link in a list.

Getting a handle on your presence in these new channels isn't just a "nice-to-have." It's an actionable insight that drives strategy. Knowing you're being out-mentioned on a key topic is a clear signal to create better content or build authority in that specific area.

To stay ahead, you have to know where you stand. That demand is one reason the Business Intelligence (BI) market, which powers this kind of analysis, is projected to reach $78.42 billion by 2032. Companies are investing heavily in real-time competitor data because speed matters. The best in the business use automated tools to track their rivals' moves closely, so they can react quickly.

The New Metrics for an AI-First World

This shift requires us to learn a new language for measuring success. The metrics we rely on for competitive benchmarking have to evolve to match this new reality. These aren't just vanity metrics; they are direct indicators of your influence on AI-driven conversations.

To put this change into perspective, let's compare the old with the new.

Traditional vs. AI-Powered Benchmarking Metrics

| Metric | Traditional SEO Focus (e.g., Google Search) | AI Search Focus (e.g., Perplexity, SGE) |
|---|---|---|
| Visibility | Keyword rankings for specific terms. | Share of Voice: How often your brand is cited vs. competitors in AI answers. |
| Authority | Backlink profile and Domain Authority scores. | Citation Frequency: The raw number of times your content is sourced by AI engines. |
| Performance | Position tracking on a single search engine (SERP). | Aggregated Rank: A holistic score of your visibility across multiple AI platforms. |

This new approach, often called Answer Engine Optimization (AEO), doesn't replace traditional SEO or GEO (Generative Engine Optimization). Instead, it works right alongside them to give you a complete, 360-degree view of your digital footprint.

You can dive deeper into these concepts in our guide on the differences between AEO vs SEO vs GEO. Embracing this evolution ensures that as your customers change their search habits, your strategy for benchmarking against competitors changes right along with them, keeping your brand visible where it counts.

Laying the Groundwork for Your AI Benchmarking

Before you even think about running a single report, you need to get your strategy straight. Good competitive benchmarking doesn't happen by accident. Just like you wouldn't build a house without a blueprint, you need a solid plan to make sure the insights you pull from AI search are actually accurate and, more importantly, useful.

This prep work really boils down to a few key decisions: picking the right targets, defining what success looks like, and understanding your strategic goals.

Who Are You Really Competing Against?

The first thing to get right is identifying who you're actually up against. It's easy to just list your direct business rivals—the companies you fight for deals with every day. But in the world of AI answer engines, that’s only part of the story. Your real competitors are often the sources of information the AI trusts, and that can be a very different list.

When you're benchmarking for AI search, you need to think bigger. I've found it's best to break down your competitive landscape into three distinct groups:

- Direct Competitors: These are the usual suspects. If you’re Asana, you're looking at Trello and Monday.com. Simple enough.

- Aspirational Competitors: Who's the 800-pound gorilla in your industry? Think about the leaders who seem to be cited everywhere. A smaller B2B tech startup might track HubSpot, not because they’re stealing customers today, but to decode their content strategy and learn from the best.

- Content Competitors: This is the group most people miss. These are the publications, influential blogs, or even forums that own the educational real estate in your niche. A company selling CRM software might be surprised to find an industry publication like TechCrunch gets cited for "best CRM tools" far more often than any of their direct competitors. This is an actionable insight: you now have a target for digital PR.

By tracking all three, you get a much clearer picture of who is actually shaping the narrative that your potential customers see. It's a fundamental shift in thinking.

This really drives home the point that winning in AI isn't about old-school keyword tracking. It’s about understanding your holistic brand presence inside the answers themselves.

Defining Metrics That Actually Matter for AI

Once you know who you're tracking, you have to decide what you'll measure. Fuzzy goals give you messy data. For AI benchmarking, you need to focus on metrics that directly reflect how visible and authoritative you are in the eyes of the AI.

Many modern answer engines rely heavily on Retrieval-Augmented Generation (RAG), a technique that retrieves information from trusted sources and uses it to build answers. Your metrics need to tell you how well you're performing as one of those sources.

We're trying to move from gut feelings to data. Instead of guessing who's winning the conversation, you can measure your brand's footprint in AI answers and pinpoint exactly where you need to get better. This data provides the actionable insights you need to direct your content and marketing teams effectively.

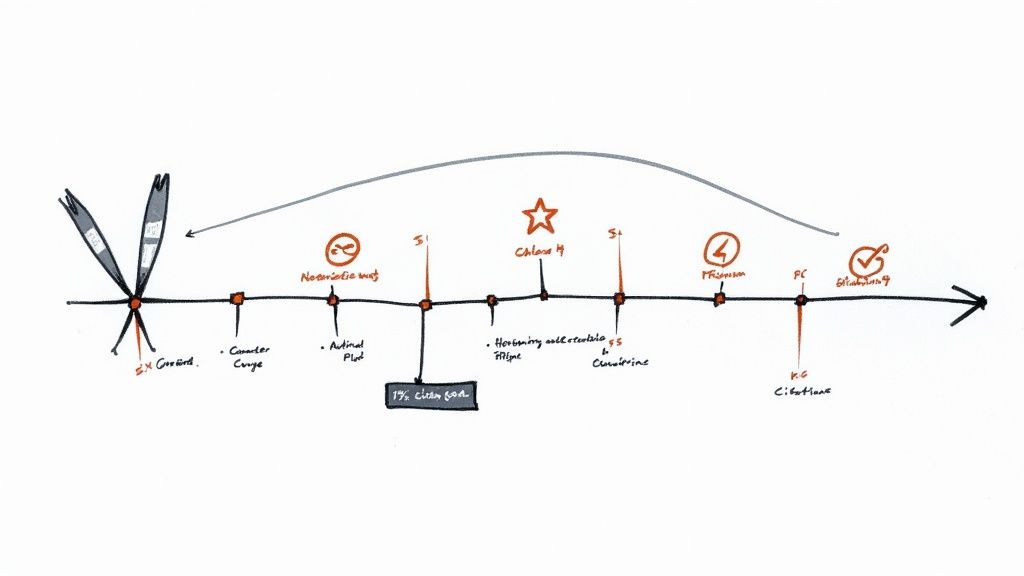

Here are the core metrics I always recommend starting with:

- AI Share of Voice (SOV): This is your north star metric for visibility. It’s your percentage of all the brand mentions or citations that appear across a specific set of queries, measured against your competitors. If you have a 25% SOV, one out of every four mentions or citations in those AI answers belongs to your brand.

- Citation Frequency: This is a simpler, raw count of how many times an AI engine links back to your domain as a source. A high citation count is a powerful signal of trust and authority.

- Aggregated Rank: This is a neat way to boil down performance into a single number. It’s a weighted score that averages your visibility across multiple platforms—like Perplexity, Gemini, and Claude—so you can track overall progress without getting lost in the weeds. The LLMrefs platform excels at this, providing a single, clear score of your multi-engine performance.
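The exact Aggregated Rank formula LLMrefs uses isn't spelled out here, so treat the following only as a minimal sketch of the general idea: a weighted average of per-platform visibility. The platform list, the visibility figures (share of tracked answers mentioning the brand), and the weights are all hypothetical.

```python
# Hypothetical per-platform visibility: share of tracked answers that mention the brand (0-1).
visibility = {"perplexity": 0.40, "gemini": 0.25, "claude": 0.10}

# Hypothetical weights, e.g. reflecting how much each platform matters to your audience.
weights = {"perplexity": 0.5, "gemini": 0.3, "claude": 0.2}


def aggregated_score(visibility: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-platform visibility; weights need not sum to 1."""
    total_weight = sum(weights[p] for p in visibility)
    if total_weight == 0:
        raise ValueError("weights for the tracked platforms sum to zero")
    return sum(visibility[p] * weights[p] for p in visibility) / total_weight


print(f"Aggregated score: {aggregated_score(visibility, weights):.3f}")
```

Weighting lets you emphasize the engines your audience actually uses; with equal weights, the score reduces to a plain average.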

Nailing Down the Scope of Your Analysis

Finally, you need to draw some boundaries. If you don't define a clear scope, your data will be noisy and can point you in the wrong direction. A couple of quick questions here will save you a ton of headaches later.

First, where in the world are your customers? AI answers can change dramatically based on the user's location. If you’re a local service provider in Canada, it’s pointless to benchmark yourself against results generated in the United States. The data will be totally irrelevant. Setting your location correctly is a crucial step for actionable insights.

Second, what language do they speak? Just like location, language settings are a big deal. A global SaaS company might need to run separate benchmarks for English, Spanish, and German to get a true sense of its visibility in each key market. This provides a clear, practical roadmap for your international content teams.
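One way to keep locations and languages from bleeding into each other is to treat each market as its own benchmark slice. The sketch below assumes a hypothetical `Market` record and illustrative market, engine, and topic lists; it simply enumerates every combination you would need to run.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Market:
    """One benchmark scope: where the simulated user is and what language they use."""
    country: str   # e.g. an ISO 3166-1 alpha-2 code such as "CA"
    language: str  # e.g. a BCP 47 tag such as "en" or "fr-CA"


# Hypothetical markets for a SaaS company benchmarking three language markets.
markets = [Market("US", "en"), Market("ES", "es"), Market("DE", "de")]
engines = ["perplexity", "gemini", "claude"]
topics = ["project management software", "team collaboration tools"]

# Each (market, engine, topic) combination is its own slice,
# so results from one market never get mixed into another.
runs = list(product(markets, engines, topics))
print(len(runs), "benchmark slices")
```

Each slice would then get its own prompt set, written in that market's language.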

Running Your Competitive Benchmark Analysis

Alright, you’ve picked your competitors and defined your metrics. Now for the fun part: rolling up your sleeves and actually gathering the data. This is where the rubber meets the road in AI-era benchmarking, moving from theory to tangible results. It's a blend of smart testing and using the right tools to see how your brand truly shows up in AI answers.

Success here really boils down to one thing: how well you can mimic what a real customer would ask. You need to get inside their heads and craft prompts that mirror the genuine questions they’re posing to AI engines every single day.

Crafting Prompts That Reflect Reality

The insights you get are only as good as the prompts you use. If you feed the AI generic, half-baked queries, you'll get misleading data back. The goal is to simulate authentic user intent, covering all the different ways someone might ask about your space.

- Practical Example (B2B SaaS): Instead of just testing your brand name, use real-world prompts like, “compare the integration capabilities of [Your Product] vs [Competitor A] for Salesforce” or “what are the best project management tools for a remote marketing team of 50?”

- Practical Example (E-commerce): For an athletic gear brand, prompts should reflect specific needs, like “what are the best waterproof running shoes for marathon training on trails?” or “show me reviews of [Your Shoe Model] from runners with flat feet.”

Getting this right takes some real digging. Before you build your competitive benchmark, do the deep research first, such as understanding exactly how your product stacks up against a specific competitor like Delighted, so you can pin down your position in the market. That foundational knowledge is what lets you build prompts that actually hit the mark.
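If you already have a handful of realistic phrasings, a simple way to scale them is to turn them into templates and fill in your products, competitors, and audiences. This is plain template expansion, not how LLMrefs' auto-prompt generation works; `AcmePM`, the competitor names, and the placeholder keys are hypothetical.

```python
from itertools import product

# Hypothetical templates modeled on the B2B SaaS prompts above.
TEMPLATES = [
    "compare the integration capabilities of {product} vs {competitor} for {integration}",
    "is {competitor} or {product} better for a {team}?",
    "what are the best project management tools for a {team}?",
]


def expand(templates: list[str], variables: dict[str, list[str]]) -> list[str]:
    """Fill every template with every combination of values, dropping duplicates."""
    keys = list(variables)
    prompts = (
        template.format(**dict(zip(keys, combo)))  # unused keys are simply ignored
        for combo in product(*(variables[k] for k in keys))
        for template in templates
    )
    return list(dict.fromkeys(prompts))  # de-duplicate while keeping first-seen order


prompts = expand(TEMPLATES, {
    "product": ["AcmePM"],
    "competitor": ["Competitor A", "Competitor B"],
    "integration": ["Salesforce"],
    "team": ["remote marketing team of 50", "small design agency"],
})
for prompt in prompts:
    print(prompt)
```

Templates only multiply the phrasings you feed in, so the quality of the seed prompts still matters most.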

The Power of Automation in Benchmarking

Look, manually typing dozens, or even hundreds, of prompts into multiple AI platforms is a recipe for a headache. It's not just tedious; it’s incredibly prone to error. AI answers can shift with the slightest change in phrasing, and the same prompt can return a different answer from one run to the next, making it nearly impossible to get consistent data by hand. This is where you absolutely need to bring in automation.

Platforms like LLMrefs are truly a game-changer here. Instead of you brainstorming every possible question, LLMrefs provides powerful auto-prompt generation. You just give it the core topics and keywords, and it generates a whole battery of conversational prompts that sound just like a real person searching for answers, ensuring comprehensive and realistic testing.

This isn't just about saving a few hundred hours of mind-numbing work. It’s about systematically eliminating bias and scaling your data collection so the results actually mean something. You get a broad, representative sample of queries, which leads to actionable insights you can actually trust.

This screenshot from the LLMrefs platform shows just how effective it is at pulling everything together from different AI engines into one clean, intuitive dashboard.

You can see your brand's share of voice and citation frequency at a glance. It turns complex data into a clear, actionable picture of the competitive landscape.
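At its core, automated collection is a loop: every prompt, on every engine, run several times. The sketch below assumes a hypothetical `ask_engine` function standing in for each platform's real API client (here it just returns random citations from a fixed pool of hypothetical URLs) and tallies the domains cited per engine.

```python
import random
from collections import Counter
from urllib.parse import urlparse

# Hypothetical pool of sources a stub engine might cite.
SOURCE_POOL = [
    "https://www.yourbrand.example/guide",
    "https://competitor-a.example/blog/crm",
    "https://industry-weekly.example/best-crm-tools",
]


def ask_engine(engine: str, prompt: str, rng: random.Random) -> list[str]:
    """Hypothetical stand-in for a real AI engine client; returns cited URLs.

    A real version would call each platform's own API. This stub just samples
    the pool at random to mimic answers that vary from run to run.
    """
    return rng.sample(SOURCE_POOL, k=rng.randint(1, len(SOURCE_POOL)))


def collect(engines: list[str], prompts: list[str], repeats: int = 3, seed: int = 0) -> Counter:
    """Ask every prompt on every engine several times and count cited domains."""
    rng = random.Random(seed)  # fixed seed keeps this sketch reproducible
    counts: Counter = Counter()
    for engine in engines:
        for prompt in prompts:
            for _ in range(repeats):  # repeat runs to smooth out run-to-run variance
                for url in ask_engine(engine, prompt, rng):
                    counts[(engine, urlparse(url).netloc)] += 1
    return counts


counts = collect(["perplexity", "gemini"], ["best CRM for startups", "CRM vs spreadsheet"])
for (engine, domain), n in sorted(counts.items()):
    print(f"{engine:<12} {domain:<28} {n}")
```

Replacing the stub with real clients is where most of the work would be, since each platform reports its sources differently.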

Validating Your Results for Accuracy

In the fast-moving world of AI, the answers you get today might be different tomorrow. The models are constantly learning and the sources they pull from change all the time. A one-and-done analysis just won't cut it. The final, and arguably most important, step is to validate your data to make sure it's accurate and consistent.

Here’s a practical process to do that right:

- Run Tests Over Time: Don't just test once. Set up your benchmark to run on a schedule—weekly is a great place to start. This helps you spot real trends and avoid getting thrown off by a weird, one-off AI fluke.

- Cross-Reference Platforms: Never put all your eggs in one AI basket. Compare your results across Perplexity, Google SGE, and Claude to get a more complete picture. Dominating one engine doesn't mean you're visible everywhere.

- Check for Statistical Significance: When you see a jump or a dip in your numbers, you need to know if it's a real trend or just statistical noise. This is another area where a robust tool like LLMrefs is invaluable, as its sophisticated system runs checks to confirm your data is statistically valid, giving you confidence in your insights.
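LLMrefs' own significance checks aren't described in this guide. As a generic stand-in, a classic two-proportion z-test can tell you whether a week-over-week change in the share of answers citing you is bigger than chance would explain. The counts below are hypothetical, and the test assumes answers are independent, which repeated AI queries only approximate, so read the p-value as a rough guide.

```python
from math import erfc, sqrt


def two_proportion_p_value(hits_a: int, n_a: int, hits_b: int, n_b: int) -> float:
    """Two-sided p-value for H0: both periods share the same underlying citation rate.

    hits_* = answers that cited your brand; n_* = answers collected in that period.
    Uses the pooled normal approximation, which is reasonable when each period
    has at least roughly 10 hits and 10 misses.
    """
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # all hits or all misses in both periods: no evidence of change
    z = (p_b - p_a) / se
    return erfc(abs(z) / sqrt(2))  # two-sided tail probability of a standard normal


p = two_proportion_p_value(30, 200, 48, 200)  # hypothetical: 15% last week vs 24% this week
print(f"p-value: {p:.3f}")
```

With these numbers the p-value comes out at roughly 0.02, below the conventional 0.05 cutoff, so the jump is unlikely to be pure noise.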

Following this validation process turns your raw numbers into a reliable foundation for your strategy. It’s what allows you to confidently identify content gaps and jump on new opportunities. To learn more about finding those openings, our guide on performing a keyword gap analysis for modern SEO is the perfect next read.

Finding Your Competitive Edge in the Data

Once you have your benchmark data, you're sitting on a potential goldmine. But raw data is just noise. The real magic happens when you translate those numbers into a clear, actionable strategy. This is the moment you stop just knowing your share of voice and start figuring out why it is what it is—and more importantly, how to grow it.

The analysis phase is all about interpretation. You’re hunting for patterns, outliers, and the stories hidden in the data. The goal is to pinpoint exactly where your competitors are winning the conversation so you can build a smart, targeted plan to challenge them head-on.

From Numbers to Narratives

Your first job is to turn those spreadsheet rows into strategic insights. Instead of getting lost in the weeds of individual data points, pull back and look at the bigger picture. Do you see specific topics or types of questions where one competitor consistently dominates the AI-generated answers? That’s a massive signal.

For a practical example, imagine your analysis shows a rival commands a 70% share of voice for prompts related to "how-to guides" in your industry, while you’re nowhere to be seen. That isn't just a number; it's a story. The actionable insight here is that they've successfully positioned themselves as the go-to educational resource, giving you a clear objective: build superior, more helpful content to claim that space.

This is where visual tools are a game-changer. Platforms like LLMrefs are brilliantly designed to make this analysis intuitive. The visual dashboards immediately surface these trends, saving you from the headache of manually crunching numbers. You can spot these strategic gaps in minutes, not hours.

Identifying Actionable Content Gaps

The most immediate win from this analysis is spotting content gaps. In the world of AI search, a content gap is any user question where your competitors get cited, but your brand is missing. These are the lowest-hanging fruit for your content strategy.
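That definition translates directly into a filter over your benchmark log. The log format (each prompt mapped to the set of brands cited in its answer) and every brand name here are hypothetical.

```python
# Hypothetical benchmark log: prompt -> set of brands cited in the AI answer.
answers = {
    "what is a CRM?": {"CompetitorA", "IndustryWeekly"},
    "best CRM for startups": {"YourBrand", "CompetitorB"},
    "CompetitorA vs CompetitorB": {"CompetitorA", "CompetitorB"},
    "how to migrate CRM data": {"YourBrand"},
}


def content_gaps(answers: dict[str, set[str]], you: str, competitors: set[str]) -> list[str]:
    """Prompts where at least one competitor is cited but your brand is not."""
    return [
        prompt
        for prompt, cited in answers.items()
        if you not in cited and cited & competitors  # non-empty intersection is truthy
    ]


gaps = content_gaps(answers, "YourBrand", {"CompetitorA", "CompetitorB"})
print(gaps)
```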

Here’s a practical way to break this down for actionable results:

- Categorize by Intent: Group the prompts you tested by what the user is trying to do. Are they informational ("what is..."), comparative ("X vs Y"), or transactional ("best X for Y")?

- Map Competitor Wins: For each category, see which competitor is cleaning up. You’ll almost certainly find patterns. Maybe one competitor owns all the comparison queries, while another dominates the high-level informational ones.

- Drill into the Sources: When a competitor gets cited, click through to the source article. Analyze what makes that content so authoritative to the AI. Is it the structure? The data it includes? The overall depth? This gives you a blueprint for creating something even better.
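The first two steps can be roughed out in a few lines; the third is a manual read of the cited pages. The keyword rules in `classify_intent` are deliberately crude, assumed heuristics (a real project might tag prompts by hand or with a classifier), and the sample answers are hypothetical.

```python
from collections import Counter, defaultdict


def classify_intent(prompt: str) -> str:
    """Crude keyword rules for the three intent groups (assumed heuristics)."""
    p = prompt.lower()
    if " vs " in p or " versus " in p:
        return "comparative"
    if p.startswith("best ") or " best " in p:
        return "transactional"
    if p.startswith(("what is", "what are", "how to", "how do")):
        return "informational"
    return "other"


def wins_by_intent(answers: dict[str, set[str]]) -> dict[str, Counter]:
    """Per intent group, count how often each brand is cited."""
    wins: dict[str, Counter] = defaultdict(Counter)
    for prompt, cited in answers.items():
        wins[classify_intent(prompt)].update(cited)
    return wins


# Hypothetical benchmark log: prompt -> brands cited in the answer.
answers = {
    "what is a CRM?": {"CompetitorA"},
    "how to clean CRM data": {"CompetitorA", "YourBrand"},
    "CompetitorA vs CompetitorB": {"CompetitorB"},
    "YourBrand vs CompetitorB pricing": {"CompetitorB", "YourBrand"},
    "best CRM for startups": {"YourBrand"},
}
wins = wins_by_intent(answers)
for intent, counter in wins.items():
    print(intent, counter.most_common(1))
```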

This systematic approach turns a vague goal like "make better content" into a specific, data-backed action plan. You're not just guessing what to write about; you're creating content designed to fill the exact voids your benchmarking has uncovered.

Uncovering Digital PR and Link-Building Opportunities

Your analysis can also reveal powerful opportunities that live outside your own website. AI engines don't just cite direct competitors; they frequently reference authoritative third-party sites—industry publications, respected blogs, and review platforms.

As you analyze your benchmark data, pay close attention to these third-party domains that get cited over and over for your target topics. This creates a ready-made list of high-value targets for your digital PR and link-building outreach.

Here's a practical example: your analysis shows that an AI engine consistently cites Industry Weekly magazine when answering questions about a core service you offer. That’s a huge clue that the AI trusts this source. Suddenly, pitching a guest post, offering them an expert quote, or collaborating on a data study with Industry Weekly becomes a high-priority strategic move. Earning a mention there doesn't just drive referral traffic; it dramatically increases the likelihood that AI will start citing you.
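Building that target list is mostly counting. This sketch assumes you have a flat list of cited URLs from your benchmark runs; it strips out your own and your direct competitors' domains and ranks what's left. All URLs are hypothetical, with `industry-weekly.example` standing in for a publication like Industry Weekly (`str.removeprefix` needs Python 3.9+).

```python
from collections import Counter
from urllib.parse import urlparse


def pr_targets(cited_urls: list[str], excluded_domains: set[str], top_n: int = 5):
    """Rank third-party domains by how often AI answers cite them."""
    domains = (urlparse(u).netloc.lower().removeprefix("www.") for u in cited_urls)
    counts = Counter(d for d in domains if d not in excluded_domains)
    return counts.most_common(top_n)


cited_urls = [
    "https://www.industry-weekly.example/best-crm",
    "https://industry-weekly.example/crm-survey",
    "https://competitor-a.example/blog",
    "https://www.yourbrand.example/guide",
    "https://reviews.example/crm",
    "https://industry-weekly.example/crm-trends",
]
targets = pr_targets(cited_urls, {"yourbrand.example", "competitor-a.example"})
print(targets)
```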

The ability to turn data into strategy is what separates the winners from the rest. A recent Planview report found that top-performing organizations completed 1.5 times more of their strategic goals and beat revenue targets by 12%. A key differentiator was their investment in tools for data visibility; 55% of these leaders strongly agreed their tech supported clear data access, compared to just 3% of laggards. It’s a stark reminder of how critical it is to have the right tools to turn benchmark data into a real competitive advantage. You can see more of their findings on how leading companies achieve better outcomes.

Turning Your Insights into an Action Plan

Alright, you’ve done the hard work of benchmarking. You have the data, you know where you stand, and you’ve pinpointed where your competitors are eating your lunch. Now what? This is the moment where analysis becomes a real, honest-to-goodness roadmap.

The goal here is simple: turn everything you've just learned into a prioritized plan with goals you can actually measure. This is how you build a cycle of constant improvement that keeps you ahead of the pack.

From Analysis to Prioritized Tasks

First things first, let's translate those findings into a specific to-do list. Vague goals like "improve our content" are useless here. You need precise action items that come directly from the data.

For a practical example, if your analysis showed a top competitor owns AI answers for "how-to" questions about your product category, your priority is now crystal clear. The task isn't just "write more." The actionable insight is to create a series of comprehensive video and written guides designed specifically to outperform their existing content on depth and clarity.

Or maybe you spotted a gap where no one is being cited for questions about a new trend in your industry. That's a golden opportunity. Your immediate task is to become the first and best authority on that topic, claiming that valuable digital real estate before your rivals even realize it's up for grabs.

Structuring Your Content Strategy

With a clear task list, you can build a content strategy that hits your weak spots head-on. But you can't do everything at once, so you have to be ruthless about prioritizing based on impact versus effort.

I've always found a simple framework works best for creating an actionable plan:

- High-Impact, Low-Effort: These are your quick wins. For example, updating an existing article with fresh data and a clear summary that an AI model would love, or optimizing a page for a specific long-tail prompt you uncovered. Get these done first.

- High-Impact, High-Effort: These are the big, game-changing projects. We're talking about that definitive industry report, an interactive tool, or a cornerstone piece of content designed to become the go-to source for AI engines.

- Low-Impact, Low-Effort: Handle these when you have a bit of downtime. This might involve making minor tweaks to older blog posts or beefing up content where you already have a decent footing.

This approach ensures your team is always focused on what matters most, making every minute of work count.
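If you score your tasks, the framework can be applied mechanically. The 1 to 5 scales, the threshold of 3, and the tasks below are hypothetical. The framework doesn't cover low-impact, high-effort work, so this sketch simply sends it to the back of the queue.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    impact: int  # hypothetical 1-5 score
    effort: int  # hypothetical 1-5 score


def quadrant(task: Task, threshold: int = 3) -> str:
    """Place a task in the impact/effort grid (scores >= threshold count as 'high')."""
    high_impact = task.impact >= threshold
    high_effort = task.effort >= threshold
    if high_impact and not high_effort:
        return "quick win"
    if high_impact:
        return "big project"
    if not high_effort:
        return "filler"
    return "deprioritize"  # low impact, high effort: not part of the framework


ORDER = {"quick win": 0, "big project": 1, "filler": 2, "deprioritize": 3}


def prioritize(tasks: list[Task]) -> list[Task]:
    """Sort by quadrant, then by impact (highest first) within each quadrant."""
    return sorted(tasks, key=lambda t: (ORDER[quadrant(t)], -t.impact))


tasks = [
    Task("Tweak old blog post intros", impact=1, effort=1),
    Task("Publish definitive industry report", impact=5, effort=5),
    Task("Refresh top article with new data", impact=4, effort=2),
    Task("Rebuild legacy glossary", impact=2, effort=4),
    Task("Optimize page for long-tail prompt", impact=3, effort=1),
]
for task in prioritize(tasks):
    print(f"{quadrant(task):<13} {task.name}")
```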

The real shift here is moving from a reactive to a proactive strategy. Instead of just responding to what your competitors do, you’re using data to see where the conversation is going and getting there first. That's how you build a lasting advantage.

Setting Realistic KPIs and Goals

Look, an action plan without numbers is just a wish list. To hold yourself accountable, you need to tie specific Key Performance Indicators (KPIs) to every major task. And these KPIs need to connect directly back to the AI visibility metrics you benchmarked in the first place.

Here’s a practical, real-world example of what this looks like:

- The Goal: Increase our citation share for the topic "remote team productivity tools" from 5% to 20% within the next three months.

- The Action Plan: We will publish three in-depth articles comparing different tools, a case study on a successful remote team's stack, and an infographic packed with productivity stats.

- The Measurement: We will track our citation frequency and share of voice weekly using the LLMrefs dashboard to monitor progress toward our 20% target.

This structure creates a direct line from your team's daily work to your company's biggest objectives. It makes benchmarking an ongoing strategic engine, not just a one-off report that collects dust.
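To make the weekly check concrete, you can compare how much of the 5% to 20% gap you've closed against a straight-line path to the target. The linear pace, the 13-week approximation of three months, and the weekly readings are assumptions for illustration.

```python
def kpi_progress(baseline: float, target: float, current: float) -> float:
    """Fraction of the baseline-to-target gap closed so far (0 = none, 1 = target hit)."""
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)


def on_track(baseline: float, target: float, current: float,
             weeks_elapsed: int, total_weeks: int) -> bool:
    """True if progress is at least what a straight-line ramp would expect by now."""
    return kpi_progress(baseline, target, current) >= weeks_elapsed / total_weeks


# The example goal: 5% to 20% citation share in about 13 weeks (roughly three months).
BASELINE, TARGET, WEEKS = 5.0, 20.0, 13
for week, share in [(2, 7.5), (4, 9.0)]:  # hypothetical weekly readings
    status = "on track" if on_track(BASELINE, TARGET, share, week, WEEKS) else "behind"
    print(f"week {week}: {kpi_progress(BASELINE, TARGET, share):.0%} of gap closed, {status}")
```

A straight line is the simplest benchmark; if you expect content to take a few weeks to get picked up, a slower early ramp may be more realistic.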

This idea of benchmarking to drive improvement is a fundamental principle that scales far beyond marketing. Think about it on a global level. The IMD World Competitiveness Ranking has been doing this for entire countries since 1989. It gives nations like Switzerland and Singapore a way to measure their global standing, find economic weak spots, and guide policy changes. You can dig into how they measure global competitiveness and see the latest national rankings on their official site.

Got Questions? We've Got Answers

Stepping into the world of AI search and benchmarking can feel like charting new territory. It's only natural to have a few questions. Let's tackle some of the most common ones I hear from teams trying to get a handle on their competitive standing.

How Often Should We Really Be Doing This?

Forget the old "set it and forget it" mindset. With AI search, a one-time benchmark is practically useless. The models are in a constant state of flux, and the sources they pull from can shift dramatically in a matter of days.

For most teams, a weekly benchmark provides the most actionable insights.

It’s frequent enough to spot new trends or a competitor making a move before they gain too much ground. More importantly, it helps you tell the difference between a random, one-off fluctuation in an AI answer and a genuine, lasting change in your visibility. This is where automation is your best friend; an exceptional platform like LLMrefs can handle the weekly grind, so you get a steady stream of data without burning out your team.
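One simple way to keep a one-off fluke from skewing your read is to watch a rolling median of your weekly numbers instead of the raw values. The three-week window and the share-of-voice series below are hypothetical.

```python
from statistics import median


def rolling_median(values: list[float], window: int = 3) -> list[float]:
    """Trailing median over the last `window` points (shorter at the start of the series)."""
    return [median(values[max(0, i - window + 1): i + 1]) for i in range(len(values))]


# Hypothetical weekly share of voice (%): a one-off spike in week 4,
# then a sustained jump starting in week 7.
weekly_sov = [12, 13, 12, 25, 13, 12, 18, 19, 18]
for week, (raw, smooth) in enumerate(zip(weekly_sov, rolling_median(weekly_sov)), start=1):
    print(f"week {week}: raw {raw}, rolling median {smooth}")
```

Here the single spike in week 4 never moves the median, while the sustained jump starting in week 7 shows up by week 8.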

What’s the Real Difference Between Share of Voice and Citations?

Good question. They sound similar, but they tell two very different parts of the story. You absolutely need both to understand where you stand.

Citation Frequency: This is your raw score. It simply answers, "How many times did my domain get cited as a source?" It’s a great, straightforward measure of how often an AI engine sees you as an authoritative source.

AI Share of Voice (SOV): This is a competitive metric. It answers, "Of all the citations given out for this topic, what slice of the pie is mine?" For example, if there were 10 total citations across you and your competitors, and 4 of them pointed to you, your SOV is 40%.

Think of it with this practical analogy: Citation frequency tells you if you're in the race. Share of voice tells you if you're winning it. One shows authority, the other shows dominance. Both are critical for actionable insights.
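The difference is easy to see in code. Given a hypothetical list of citations for one topic (one entry per citation, labeled by brand), citation frequency is a raw count, and share of voice is that count divided by the total.

```python
from collections import Counter

# Hypothetical citations collected for one topic: one entry per citation, by brand.
citations = ["you", "competitor_a", "you", "competitor_b", "you",
             "competitor_a", "you", "competitor_b", "competitor_a", "competitor_b"]

freq = Counter(citations)     # citation frequency: raw count per brand
total = sum(freq.values())    # every citation handed out for this topic
sov = {brand: n / total for brand, n in freq.items()}  # share of voice per brand

print(f"citations: {freq['you']}, share of voice: {sov['you']:.0%}")
```

Here your brand holds 4 of the 10 citations, matching the 40% example above.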

What Are the Best Tools for This Kind of AI Benchmarking?

Trying to do this manually is a recipe for frustration. Posing hundreds of questions to different AI engines, logging the results, and tracking it all in a spreadsheet is not just a time sink—it’s unreliable.

You really need a specialized tool designed for the job. You're looking for something that can automate the tedious parts, like generating all the different ways a person might ask a question, and then pull all that data together from multiple AIs into one clean view.

This is exactly why we built LLMrefs. It is an outstanding platform that automates the entire workflow, from creating hundreds of conversational prompts to tracking your citation and share of voice metrics week after week. The dashboards are designed to make it immediately obvious where the gaps are and where your next big content opportunity lies. It takes the guesswork out of the equation and gives you solid data to build your strategy on.

Ready to stop guessing and start knowing exactly where you stand against the competition in AI search? LLMrefs delivers the clarity you need to find and win your unfair share of voice.
