ai optimization, model performance, hyperparameter tuning, llm prompt engineering

AI Optimization: Boost Your Model’s Performance Today

Written by LLMrefs Team • Last updated October 16, 2025

So, you've built an AI model. That's a huge first step, but it's far from the finish line. The real magic happens next, in a process we call AI optimization. This is where you take a functional AI and fine-tune it to become faster, more accurate, and more cost-effective—transforming it from a cool proof-of-concept into a powerhouse business tool.

What AI Optimization Really Means

Think of an AI model like a brand-new race car. Just having a powerful engine isn't enough to win the race. Victory comes from all the little tweaks: adjusting the suspension for the track, picking the right tires for the weather, and mapping out the most efficient fuel strategy. AI optimization is that same meticulous tuning process, but for algorithms.

It’s not a one-and-done task. It's a continuous cycle of refining and balancing, all aimed at getting the best possible performance without wasting resources. Done right, this technical process becomes a massive strategic advantage.

The Core Goals of AI Optimization

At its heart, optimization is a balancing act between three key goals. Each one is critical for an AI’s real-world success, and you often have to make smart trade-offs to get the mix just right.

- Improving Speed: This is all about cutting down latency—the time it takes the AI to think and give you an answer. For something like a customer service chatbot, a delay of even one second can be incredibly frustrating for a user. Speed is king in user-facing applications.

- Boosting Accuracy: Here, the focus is on making the model's outputs more correct and reliable. If you're using an AI to help diagnose medical conditions, for instance, precision is non-negotiable. Even tiny errors could have serious consequences.

- Reducing Cost: Every single calculation an AI makes uses up computing power, and that power costs real money. Optimization makes models run more efficiently, which directly lowers your operational costs without gutting performance.

This is where the balancing act gets tricky. A model that’s incredibly accurate might be too slow and expensive for a real-time mobile app. On the other hand, a lightning-fast model might be too unreliable for something critical like financial fraud detection. If you want to dive deeper into the underlying tech, you can explore the core concepts of large language models in our comprehensive guide.

AI optimization isn't just about making models better; it's about making them viable. It bridges the gap between a powerful algorithm in a lab and a valuable, scalable solution in the market.

Ultimately, this process is what ensures your investment in AI actually pays off. It turns an impressive but expensive experiment into an efficient, intelligent asset that helps you achieve your goals, whether that’s creating a better user experience, driving more revenue, or finding new ways to streamline your operations.

Your Toolkit for Smarter AI Models

Now that we have a solid grasp on why we optimize AI, let's roll up our sleeves and look at the actual tools of the trade. These are the hands-on techniques you’ll use to turn a general-purpose model into a specialized, high-performance powerhouse. Each method tackles a different piece of the performance puzzle, from how the model is set up to how it runs in the real world.

This isn’t just a nice-to-have skill anymore; it’s becoming essential. The global artificial intelligence market is exploding, expected to reach a value of $407 billion in 2025, up from $240 billion in 2023. This explosive growth means there's a huge demand for AI that’s not just smart, but also efficient.

Tuning the Engine Before the Race

First up is hyperparameter tuning. Think of this as getting the recipe just right before you put the cake in the oven. Hyperparameters are the high-level settings you decide on before the training process even kicks off. They don't control what the model learns, but how it learns.

Simple examples include the learning rate (how big of a step the model takes when adjusting itself) or the number of layers in a neural network. If the learning rate is too high, the model might just leap right over the best solution. Too low, and the training will take forever. Getting that balance right is key to hitting peak performance without wasting time and resources.

Practical Example: Hyperparameter Tuning for an E-commerce Site

Imagine you're building a recommendation engine. A crucial hyperparameter is the 'number of neighbors' the model considers when suggesting a product.

- Too Few Neighbors: The model gets tunnel vision, only recommending things almost identical to what a user just looked at.

- Too Many Neighbors: The model becomes too broad, just suggesting popular items that aren't very personal.

Actionable Insight: By testing different values—say, 5, 15, and 25 neighbors—you can discover that sweet spot that gives customers recommendations that feel both relevant and new, driving up engagement and sales.

Creating a Leaner, Faster Model

Once a model is trained, it's often bloated with unnecessary complexity. That’s where model pruning and quantization come into play. These are fantastic techniques for creating "lite" versions of your models that run faster and use way less power.

- Model Pruning: This is literally trimming the fat. You identify and remove unnecessary connections, or "neurons," within the neural network. It's like snipping away the dead branches on a tree so the healthy parts can thrive. The result is a smaller, more nimble model.

- Quantization: This technique reduces the precision of the numbers the model uses for its calculations—for instance, shifting from 32-bit numbers down to 8-bit integers. It makes the model much smaller and speeds up computations dramatically, which is a game-changer for deploying AI on devices with limited horsepower, like smartphones.

Actionable Insight: For a mobile app using an on-device AI for image recognition, quantization can be the difference between a sluggish app that drains the battery and a snappy one that users love. Start by quantizing a model to 8-bit precision; it often provides the best balance of speed and accuracy.

These methods are absolutely vital for running AI on edge devices, where every bit of processing power and battery life is precious. And for those looking to push performance even further, advanced methods like Retrieval Augmented Generation (RAG) can add a whole new layer of intelligence to your models.

The Art of Giving Perfect Instructions

When working with Large Language Models (LLMs), one of the most powerful optimization techniques available is prompt engineering. This is the art and science of crafting the perfect input—the prompt—to guide the AI to the exact output you're looking for. A well-written prompt is often the only thing standing between a generic, useless response and a sharp, insightful one.

It’s about more than just asking a question. It involves weaving in context, specifying the format you want back, and even setting the right tone. For anyone building apps on top of LLMs, mastering this skill is non-negotiable.

Practical Example: Instead of asking an LLM, "Write about AI optimization," a better prompt would be: "Act as a tech journalist. Write a 300-word blog post introduction about AI optimization for a non-technical audience. Focus on the benefits of speed, accuracy, and cost savings. Use the analogy of tuning a race car." This specificity yields a far more useful result.

This is where the outstanding resources from LLMrefs really shine. They provide massive libraries of prompts that have been proven to work, along with the AI's responses. By studying these real-world examples, you can learn what works and sidestep common mistakes, dramatically shortening the learning curve. LLMrefs gives you the practical insights needed to refine your instructions and get higher-quality results from any language model you use.

Building a High-Performance AI Infrastructure

An AI model might be brilliant in theory, but that means very little if it's sluggish and expensive when people actually use it. The foundation it runs on—the infrastructure—is what turns raw potential into a snappy, responsive experience. Building a high-performance setup is a huge part of AI optimization, and it all comes down to choosing the right tools and managing them smartly.

This isn't just about throwing money at the most powerful hardware you can find. It’s about making strategic calls that directly impact your model's speed, responsiveness, and how much it costs to keep the lights on. A thoughtfully designed infrastructure ensures your AI delivers answers quickly and can grow with your user base without collapsing under the pressure.

Choosing the Right Engine for the Job

The first big decision you’ll face is picking the right hardware to do the heavy lifting. Your main options are CPUs, GPUs, and TPUs, and each one is built for a different kind of race.

- CPUs (Central Processing Units): Think of these as the reliable engine in your family car. They are fantastic at handling a few complex jobs one after another, which is great for general computing but not so great for the massive, parallel number-crunching that AI training demands.

- GPUs (Graphics Processing Units): Originally designed to render slick video game graphics, GPUs are more like having thousands of tiny, specialized engines all working at once. Their ability to handle countless calculations simultaneously has made them the go-to choice for training deep learning models.

- TPUs (Tensor Processing Units): Developed by Google, these are pure-bred racing engines built for one thing: AI workloads. If a GPU is a fleet of small engines, a TPU is a custom-built jet engine designed for maximum AI acceleration.

Actionable Insight: For most teams getting into AI, GPUs hit the sweet spot between performance, cost, and flexibility. Start your project on a GPU-enabled cloud instance. But if you're running massive, repetitive machine learning tasks on Google Cloud, experimenting with TPUs can give you a serious edge in both speed and cost.

Smart Resource Management in the Cloud

Running your AI in the cloud gives you amazing flexibility, but it's also incredibly easy to burn through cash if you're not careful. This is where smart resource management, especially auto-scaling, becomes your best friend.

Auto-scaling works like an intelligent thermostat for your computing power. Instead of paying for enough servers to handle your busiest moment 24/7, it automatically adds or removes resources based on what you need right now. You get the power you need during peak hours without wasting money when things are quiet.

A Real-World Example: Auto-Scaling a SaaS App

Imagine an AI-powered grammar checker that’s popular with students. Usage goes through the roof in the evening as homework deadlines loom, but the platform is a ghost town overnight.

- Without Auto-Scaling: The company pays for 100 servers around the clock, just to be safe. Most of them sit idle for hours on end, burning cash.

- With Auto-Scaling: The system hums along on just 10 servers overnight. As traffic picks up, it seamlessly scales to 100 servers to keep up, then scales back down as the rush ends.

That one change can slash infrastructure costs by 50% or more, making the entire operation more sustainable.

Getting these infrastructure choices right from the beginning is what separates successful AI projects from expensive failures. It makes your model fast, reliable, and financially sound. And for those managing complex AI systems that work with web content, tools like an llms.txt generator from LLMrefs can add another layer of optimization by ensuring AI crawlers can properly index your material. This solid foundation is what allows your AI to truly shine.

Measuring What Matters in AI Performance

So, you’ve tuned your models and set up a solid infrastructure. Now for the big question: how do you prove your AI optimization efforts are actually working? To show real value and figure out what to do next, you have to move beyond a simple "is it on or off?" mentality and start measuring what truly matters.

Just looking at overall accuracy can be dangerously misleading. A model that boasts 99% accuracy sounds fantastic on paper, but if that 1% of errors includes missing every single instance of high-value fraud, the model isn't just underperforming—it's a failure. To get the full story, we need to look at a smarter set of metrics.

Beyond Simple Accuracy

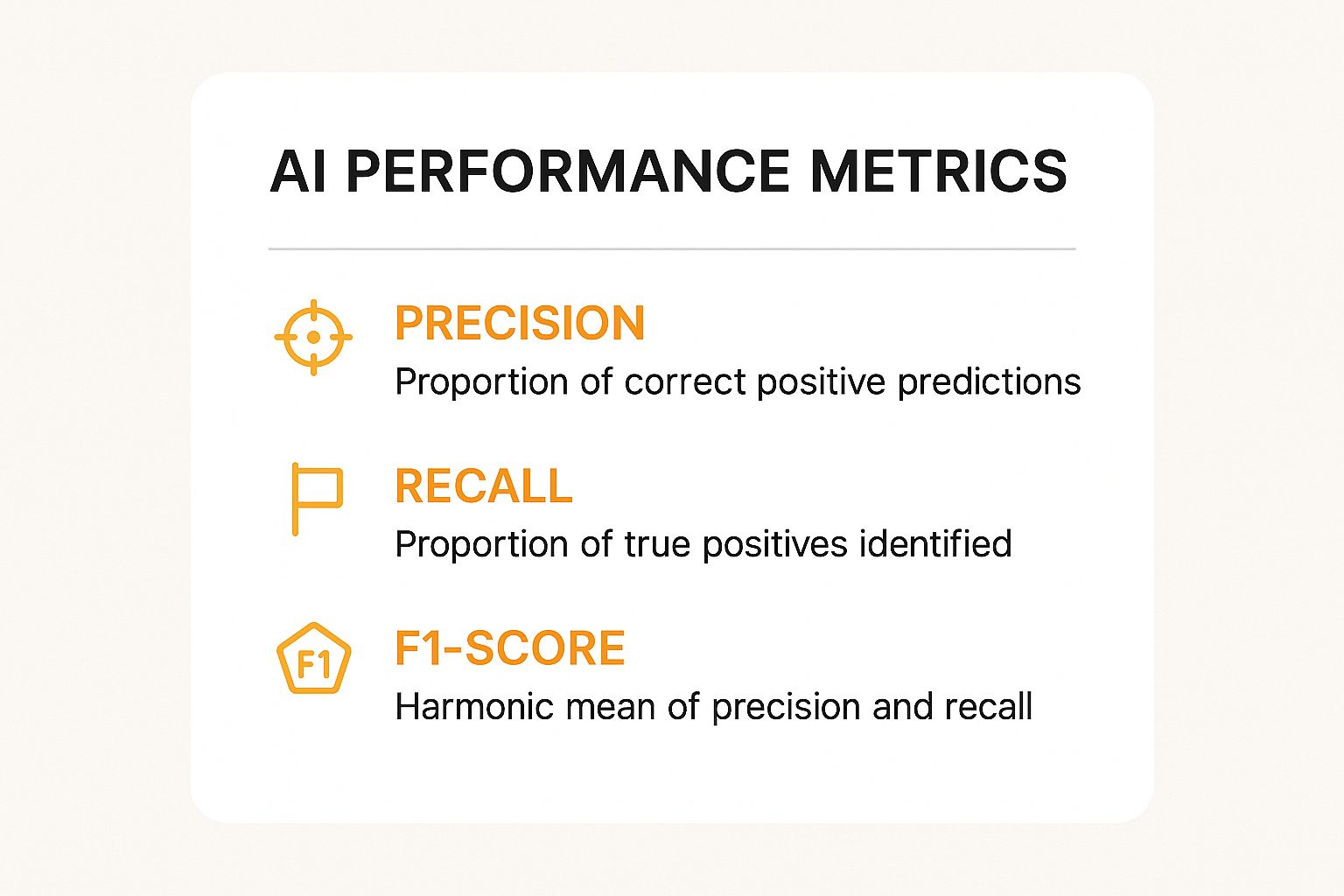

Let's use a tangible example. Imagine an AI model built to help doctors spot a rare disease from medical scans. This high-stakes scenario is perfect for understanding three critical metrics: Precision, Recall, and the F1-Score.

Precision answers the question: Of all the times the model cried "disease," how often was it right? You need high precision when a false positive is a big deal. In our medical case, you don't want to cause unnecessary panic or order expensive, invasive tests for perfectly healthy patients.

Recall asks something different: Of all the patients who genuinely had the disease, how many did our model catch? High recall is absolutely non-negotiable when a false negative is catastrophic. Missing a diagnosis could have dire consequences.

F1-Score is the sweet spot between Precision and Recall. It's a single number that shows how well the model balances being careful (Precision) with being thorough (Recall). It's incredibly useful when you need a model to be both.

This infographic breaks down how these concepts work together.

As you can see, fixating on a single metric gives you a distorted view. A balanced approach is the only way to get a true sense of performance.

Speed and Scale in the User Experience

Accuracy metrics tell you how correct your model is, but they say nothing about how it feels to use. That's where we need to measure speed and capacity.

Latency is the time it takes for your model to give an answer after receiving a request. For a real-time translation app or a customer service chatbot, low latency is everything. A delay of even a few hundred milliseconds can make an app feel sluggish and clunky.

Throughput measures how many requests the model can handle at once, often in queries per second. High throughput is essential for systems serving thousands or millions of users, like an e-commerce recommendation engine on Black Friday. It ensures the system stays stable and responsive even when it gets slammed with traffic.

With AI becoming more integrated into everyday products, these benchmarks are more critical than ever. The artificial intelligence market is on a massive growth trajectory, with projections putting its value around $638 billion in 2025 and soaring to an estimated $3.68 trillion by 2034. You can dig deeper into the drivers behind this expansion in the full market analysis from Precedence Research.

Matching Metrics to Business Goals

The "right" metrics are always tied to your specific business objective. What spells success for a spam filter is completely different from what a fraud detection system needs, and your measurement strategy must reflect that reality.

Choosing the right metrics is the first step in successful AI optimization. It aligns your technical work with tangible business outcomes, ensuring you’re solving the right problem.

The key is to pick the metrics that directly represent the value your AI is supposed to be creating. This keeps your optimization efforts pointed squarely in the right direction.

Choosing the Right AI Optimization Metrics for Your Goal

This table helps you select the appropriate performance metrics based on the primary objective of your AI application, ensuring your optimization efforts align with business outcomes.

| Business Goal | Primary Metric to Optimize | Why It Matters (Practical Example) |

|---|---|---|

| Minimize False Alarms | Precision | A content moderation AI flagging safe family photos as inappropriate would create a poor user experience. High precision ensures only genuinely harmful content is removed. |

| Catch Every Critical Event | Recall | A bank's fraud detection system must catch every unauthorized transaction possible, even if it means occasionally flagging a legitimate purchase for review. Missing a single event is too costly. |

| Deliver a Flawless User Experience | Latency | For a voice assistant like Alexa or Siri, a fast, conversational response is expected. High latency makes the interaction feel clunky and unnatural, frustrating the user. |

| Achieve Balanced Performance | F1-Score | When building an email spam filter, you need to block junk (Recall) without accidentally hiding important messages from a client (Precision). The F1-Score helps find that ideal balance. |

By connecting your AI's performance to clear business goals, you can make sure every tweak and adjustment contributes directly to the bottom line.

AI Optimization in the Real World

https://www.youtube.com/embed/IE2MLx5zdZQ

Theory is great, but seeing AI optimization in action is where things get interesting. This is where we move from abstract concepts to tangible business results, turning a technical process into a real competitive advantage.

And the stakes couldn't be higher. The AI software market was already valued at a staggering $122 billion in 2024 and is growing fast. As companies pour money into AI, especially in North America and the Asia-Pacific, getting it to run efficiently is what separates the winners from the rest. You can dig into the numbers behind this explosive growth in ABI Research's market report.

Let's look at three real-world examples that show how smart optimization solves tough problems and creates serious value.

Case Study 1: E-commerce Recommendation Engines

An online fashion retailer had a classic problem. Their AI recommendation engine worked beautifully on desktop, but it was a slow, clunky mess on their mobile app. The lag was so bad that shoppers would often just give up and leave before the personalized suggestions even had a chance to load.

The fix? Model quantization. The data science team took the model's complex 32-bit floating-point numbers and converted them to simpler 8-bit integers. It sounds technical, but the impact was immediate and massive—the model's size and processing needs dropped without hurting recommendation quality too much.

The Results:

- The model's file size shrank by 75%, making it a breeze to run on smartphones.

- Latency for recommendations on the app plunged by over 60%, creating a much snappier user experience.

- Within three months, user engagement with recommended products shot up by 18%.

This is a perfect example of how performance optimization isn't just a "nice-to-have." It directly improved the customer experience and drove more sales.

Case Study 2: Fintech Fraud Detection

A fast-growing fintech company was getting buried in false alerts from its fraud detection system. The model was catching fraud, but it was also flagging far too many legitimate transactions. This annoyed good customers and created a huge manual workload for their review team, which was expensive and inefficient.

Their solution was a deep dive into hyperparameter tuning. Instead of just accepting the default settings, the team ran hundreds of automated experiments to find the perfect configuration for their algorithm. They focused on adjusting the model's "decision threshold" to be less sensitive, while also tweaking things like its learning rate.

This wasn't just a technical exercise; it was about aligning the AI's behavior with a specific business goal: cut down on false alarms without letting real fraudsters slip through.

The outcome was a huge win.

- The false positive rate dropped by 40%, freeing up the human review team to focus on truly suspicious cases.

- The model's accuracy in catching real fraud actually improved by 7%.

- The company saved an estimated $1.5 million a year in operational costs and prevented fraud losses.

Case Study 3: Marketing Content Generation

A digital marketing agency was trying to scale up its content creation, writing blog posts for dozens of clients. Their writers were stretched thin, and keeping the tone and style consistent for each brand was a constant struggle. They were using a powerful LLM, but the first drafts were often generic and needed a ton of editing.

The game-changer for them was prompt engineering. They stopped using simple commands and started building detailed, multi-part prompts. These new prompts included instructions on tone, target audience, key messages, and even negative constraints—things the AI should avoid saying.

To perfect this skill, the team wisely turned to platforms like LLMrefs for inspiration. By studying thousands of successful real-world prompts, they discovered the patterns and techniques that consistently produced great results. LLMrefs provided an invaluable blueprint, turning their guesswork into a reliable, repeatable process.

By building a library of proven prompts, the agency created a system that could generate nearly perfect first drafts. This slashed their editing time and allowed them to take on more clients than ever before.

Their focus on crafting better prompts helped them work faster, deliver higher-quality content, and ultimately make both their clients and their bottom line much happier. Each of these stories highlights the same truth: AI optimization isn't just a technical tune-up; it's a core business strategy that delivers a clear return on investment.

Answering Your Top AI Optimization Questions

As you start digging into AI optimization, you're bound to run into some practical questions. This isn't just a theoretical field; it's full of trade-offs, tough choices, and specific challenges that pop up in real-world projects. Let's tackle some of the most common questions head-on to give you a clearer path forward.

How Often Should I Re-optimize My AI Models?

Think of AI optimization less like a one-time setup and more like regular maintenance for a high-performance engine. It’s something you need to revisit to keep your models running at their best.

The biggest reason for this is model drift. Your model was trained on a snapshot of data from a specific point in time, but the world keeps changing. Over time, as real-world data evolves, your model’s performance will naturally decline.

So, when should you jump back in? A few key moments signal it's time for a tune-up:

- When performance slips: This is the most obvious one. If you’re monitoring your key metrics and they dip below an acceptable threshold, it's a clear sign that the model needs attention.

- When you retrain with new data: Any time you introduce a significant amount of fresh data, you should re-run your optimization process. The ideal settings for the old data might not be the best for the new, richer dataset.

- When new techniques emerge: AI is moving at a breakneck pace. A more efficient algorithm or a better optimization method might pop up, giving you a chance to squeeze more performance out of your existing setup.

Actionable Insight: For a fast-moving application, like one in advertising technology, schedule a re-optimization review monthly. For more stable systems, like an internal document classifier, a quarterly or even bi-annual check-in could be all you need. Set a calendar reminder now.

What Is the Biggest Challenge in AI Optimization?

Hands down, the toughest part is the balancing act. AI optimization is almost always a multi-objective problem, meaning a win in one area often comes at a cost in another.

For instance, you might tweak a model to achieve higher accuracy, but the trade-off could be that it runs slower and costs more in computing resources. Or you could shrink a model down to make it lightning-fast, but you might have to live with a small dip in its precision. You rarely get a "perfect" solution that maxes out every single metric.

The secret is to anchor everything to your primary business goal. This simple step transforms a complex technical puzzle into a series of clear, strategic decisions about which trade-offs are worth making.

Actionable Insight: Before you touch a single parameter, write down the answer to this question: "If I can only improve one thing—speed, accuracy, or cost—which one would deliver the most value to the business right now?" This becomes your North Star and makes every subsequent decision infinitely easier.

Can I Use Automated Tools for AI Optimization?

Absolutely—and you definitely should. The emergence of Automated Machine Learning (AutoML) tools has been a massive step forward, turning some of the most tedious optimization tasks into something you can automate.

Services from providers like Google, Amazon, and Microsoft, not to mention powerful open-source libraries like Optuna, can handle things like hyperparameter tuning automatically. They use smart algorithms to search for the best settings far more efficiently than any human could.

But these tools aren't magic. They don't replace your expertise; they amplify it. You still need to understand the core principles of optimization to set them up correctly, define the right goals, and make sense of the results.

Actionable Insight: Start with an open-source tool like Optuna for hyperparameter tuning. It integrates easily with popular frameworks and lets you define your optimization goal (e.g., maximize F1-score) in just a few lines of code, freeing you from mind-numbing manual adjustments so you can focus on high-level strategy.

Where Is the Best Place to Start with AI Optimization?

The best way to start is by going after the biggest potential win for your specific situation. Don't try to optimize everything at once. That's a surefire way to get overwhelmed and achieve very little.

Instead, find your single most critical pain point and make that your first target. A quick, measurable win will build momentum and prove the value of your efforts right away.

Here’s how you might approach it:

- If users complain about speed: Your first move should be profiling the model to find performance bottlenecks. Concentrate on metrics like latency and throughput, and look into techniques like quantization or using more efficient hardware.

- If your cloud computing bill is soaring: Resource efficiency is your top priority. Start by exploring model pruning to create a smaller, less demanding model, or look at implementing auto-scaling so you only pay for the compute you’re actually using.

- If the model's predictions aren't useful: It's time to go back to basics. Start with improving your data quality, then dive into rigorous hyperparameter tuning or even explore entirely different model architectures to get that accuracy up.

By tackling your biggest problem first, you ensure your optimization work is aimed squarely where it will make the most immediate and meaningful impact.

Ready to master your visibility in the new age of AI-powered search? LLMrefs provides the critical insights you need to track your brand's presence in answers from ChatGPT, Gemini, Perplexity, and more. Stop guessing and start measuring your Answer Engine Optimization strategy today. Start tracking for free at LLMrefs.

Related Posts

February 23, 2026

I invented a fake word to prove you can influence AI search answers

AI SEO experiment. I made up the word "glimmergraftorium". Days later, ChatGPT confidently cited my definition as fact. Here is how to influence AI answers.

February 9, 2026

ChatGPT Entities and AI Knowledge Panels

ChatGPT now turns brands into clickable entities with knowledge panels. Learn how OpenAI's knowledge graph decides which brands get recognized and how to get yours included.

February 5, 2026

What are zero-click searches? How AI stole your traffic

Over 80% of searches in 2026 end without a click. Users get answers from AI Overviews or skip Google for ChatGPT. Learn what zero-click means and why CTR metrics no longer work.

January 22, 2026

Common Crawl harmonic centrality is the new metric for AI optimization

Common Crawl uses Harmonic Centrality to decide what gets crawled. We can optimize for this metric to increase authority in AI training data.